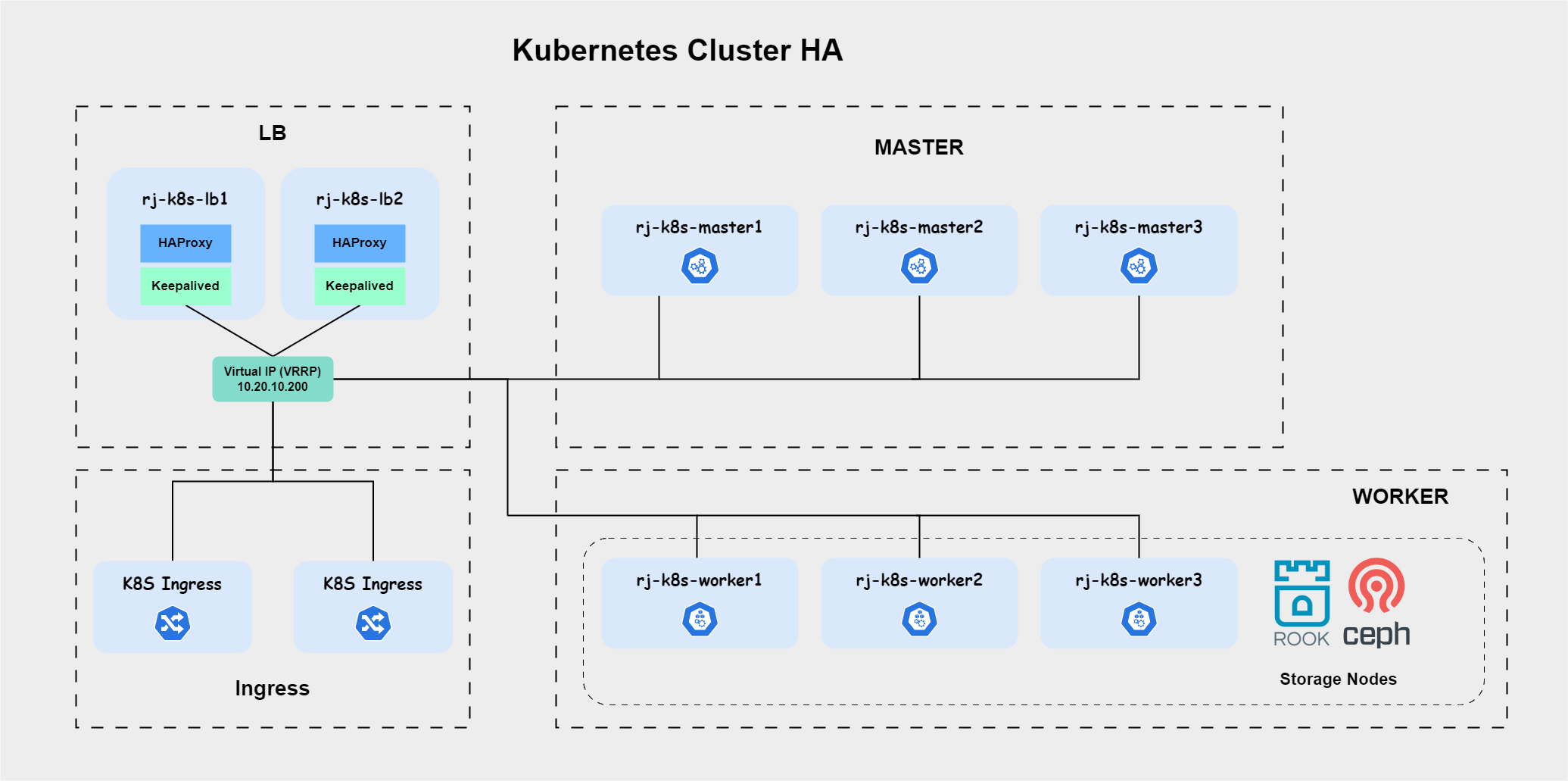

Specification: Kubernetes, Rook, Ceph

Lab Topology

For the Kubernetes cluster installation, see the previous article, Kubernetes Cluster Multi-Master High Availability (rjhaikal.my.id).

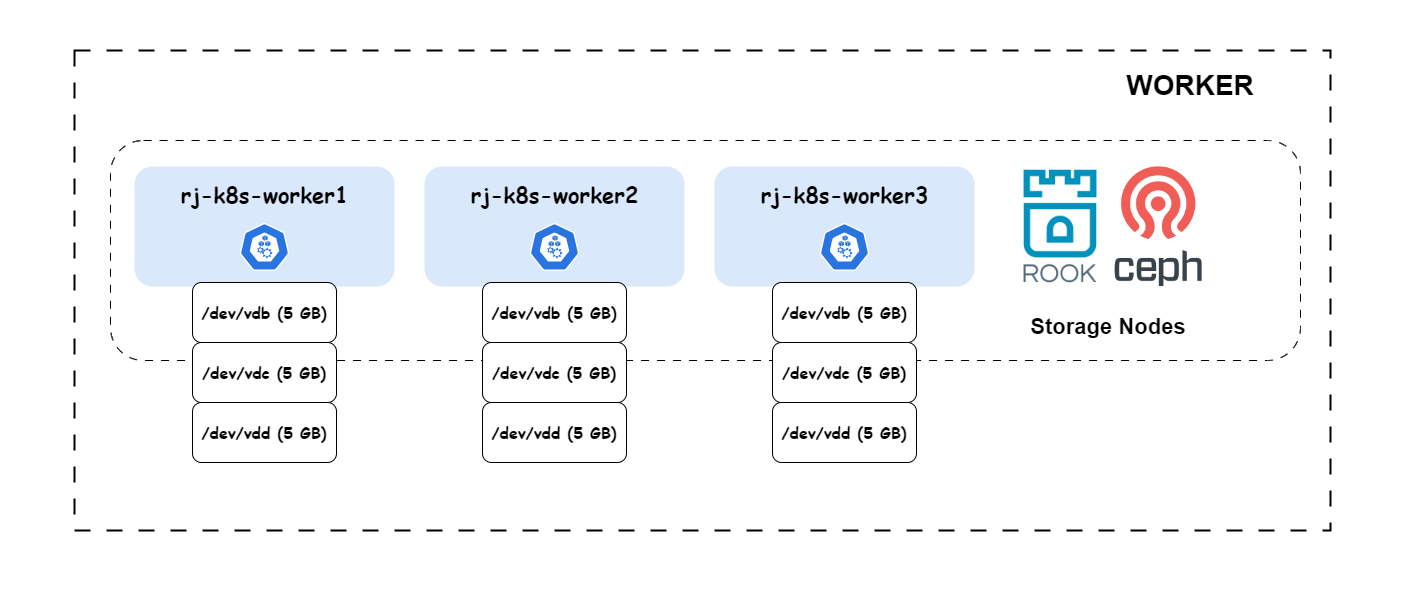

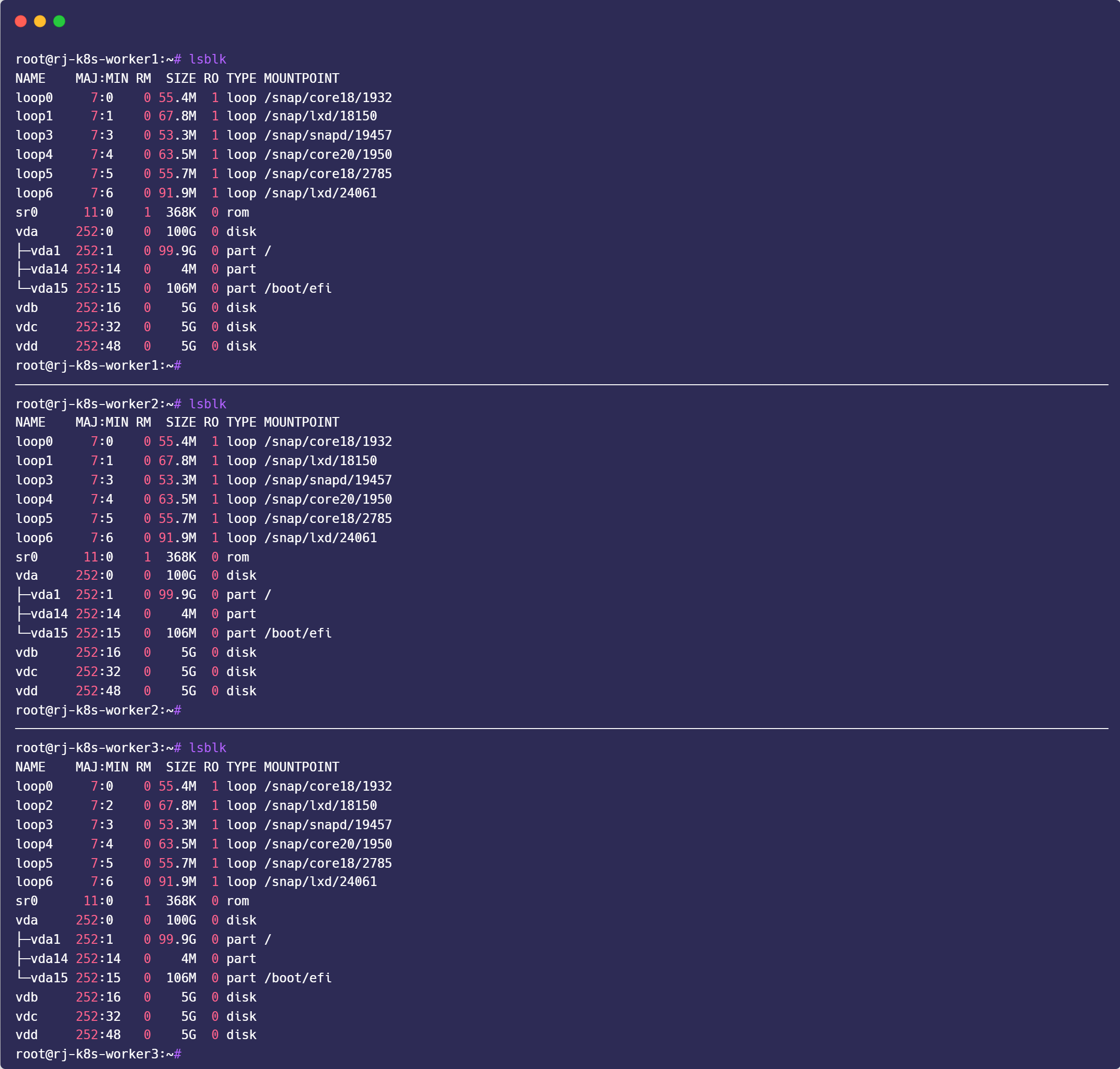

Storage Node Disks

Each storage node has three additional disks (vdb, vdc, vdd), for a total of nine disks in the Rook cluster.

What is Rook / Ceph?

Rook is a free, open source, cloud-native storage orchestrator for Kubernetes. It supports a diverse set of storage solutions and integrates them natively with cloud-native environments. The storage solutions Rook currently supports are listed in the project status section of the Rook project.

Ceph is a distributed storage system that provides file, block, and object storage and is deployed in large-scale production clusters. Rook automates the deployment, bootstrapping, configuration, scaling, and upgrading of a Ceph cluster within a Kubernetes environment. Ceph is widely used in in-house infrastructure, where a managed storage solution is rarely an option.

Deploy Rook Ceph Storage on Kubernetes Cluster

These are the minimum requirements for deploying Rook and Ceph storage on a Kubernetes cluster:

- A cluster with a minimum of three nodes

- Available raw disk devices (with no partitions or formatted filesystems)

- Or raw partitions (without a formatted filesystem)

- Or Persistent Volumes available from a storage class in block mode
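To check the raw-device requirement on a storage node, a small filter over `lsblk` output can help. This is a minimal sketch: the function name `list_raw_devices` is hypothetical, and it only looks at the filesystem column, so a disk that already has partitions still needs a manual look at plain `lsblk` output.

```shell
# Print the names of block devices that carry no filesystem signature.
# Input: lines in the format produced by `lsblk -rno NAME,FSTYPE`
# (device name, followed by an optional filesystem type).
# list_raw_devices is a hypothetical helper name, not part of Rook.
list_raw_devices() {
  # A line with only one field has an empty FSTYPE column.
  awk 'NF == 1 { print $1 }'
}
```

On a storage node it could be used as `lsblk -rno NAME,FSTYPE | list_raw_devices`; in this lab, vdb, vdc, and vdd would be expected in the result.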

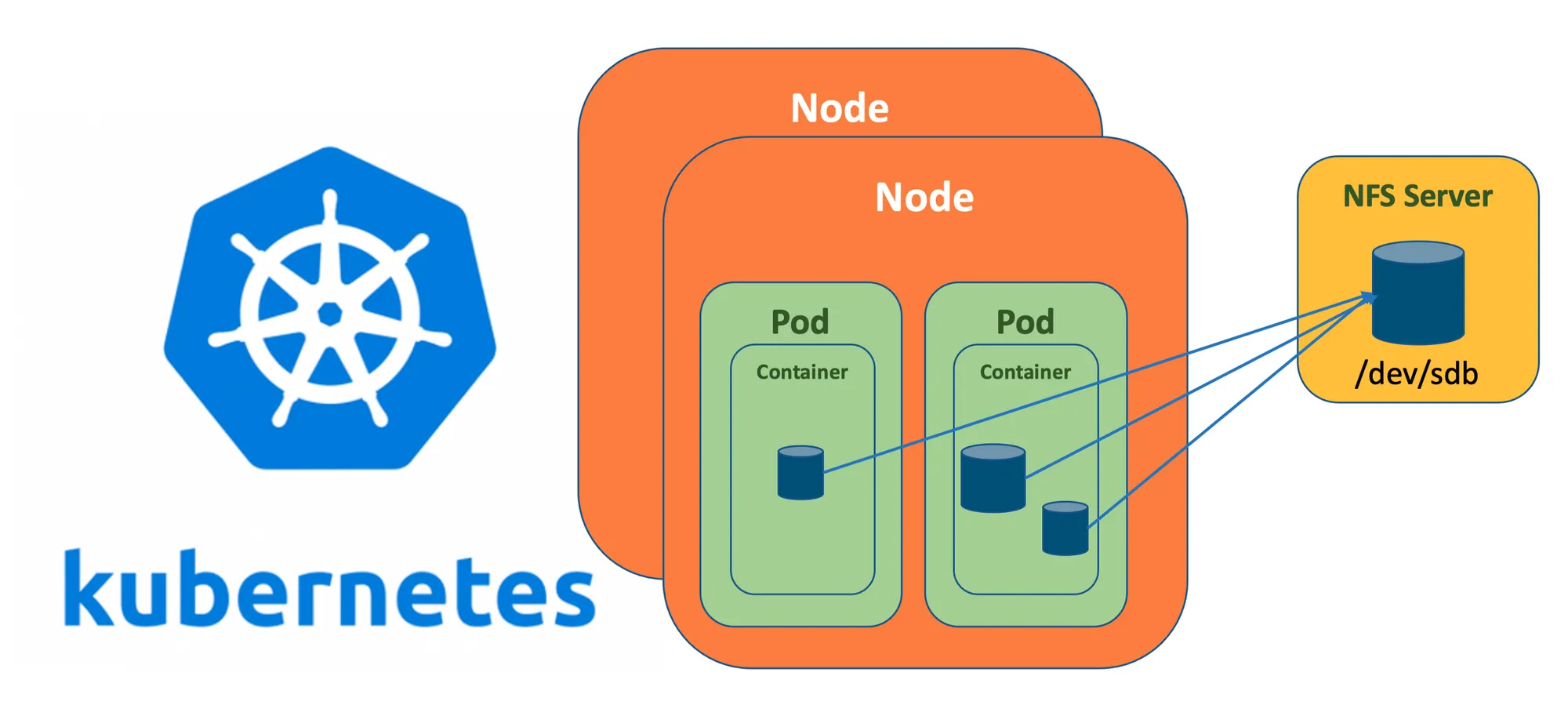

If you only need simple persistent volume storage for your cluster, check out my NFS article.

Deploy Rook Storage Orchestrator

1. Clone the Rook project from GitHub using git.

cd ~/

git clone --single-branch --branch release-1.11 https://github.com/rook/rook.git

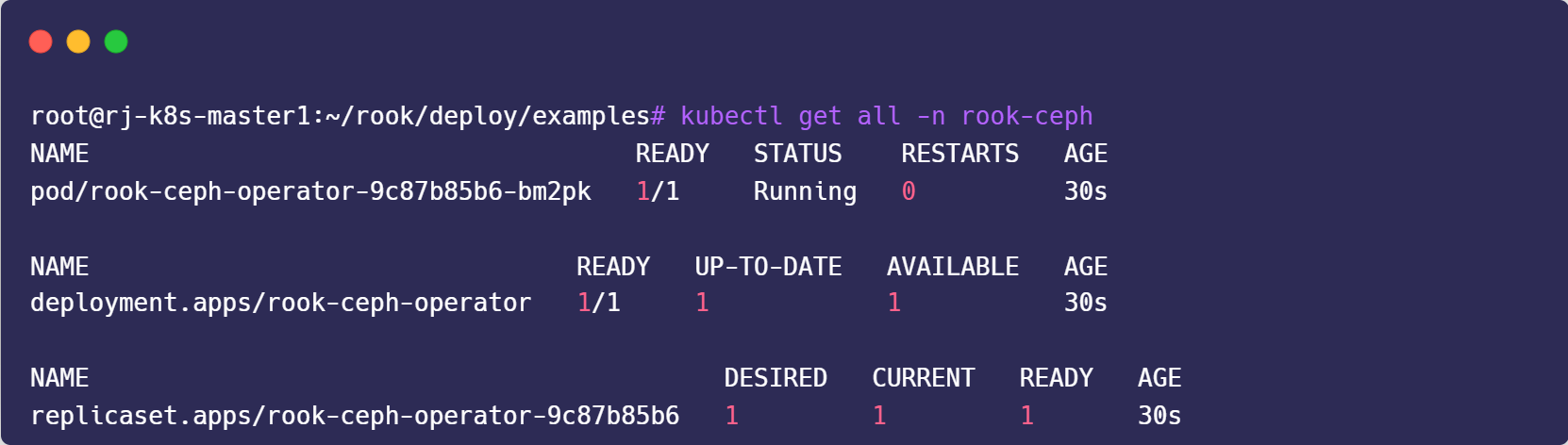

cd rook/deploy/examples/

2. Deploy the Rook Operator

kubectl create -f crds.yaml

kubectl create -f common.yaml

kubectl create -f operator.yaml

kubectl get all -n rook-ceph
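Rather than re-running `kubectl get all` until the operator shows up, the wait can be scripted. The sketch below assumes the operator pod carries the label `app=rook-ceph-operator`, as in the stock operator.yaml; `wait_for_operator` and the `KUBECTL` override variable are hypothetical names introduced here for illustration.

```shell
# Block until the Rook operator pod reports Ready, or fail after a timeout.
# Assumption: the operator pod is labelled app=rook-ceph-operator.
# KUBECTL is a hypothetical override for the kubectl binary (defaults to kubectl).
wait_for_operator() {
  local timeout="${1:-300s}"
  "${KUBECTL:-kubectl}" wait -n rook-ceph --for=condition=Ready pod \
    -l app=rook-ceph-operator --timeout="$timeout"
}
```

Calling `wait_for_operator` with no argument waits up to five minutes; `wait_for_operator 60s` shortens that.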

Create Ceph Cluster on Kubernetes

1. Set default namespace to rook-ceph

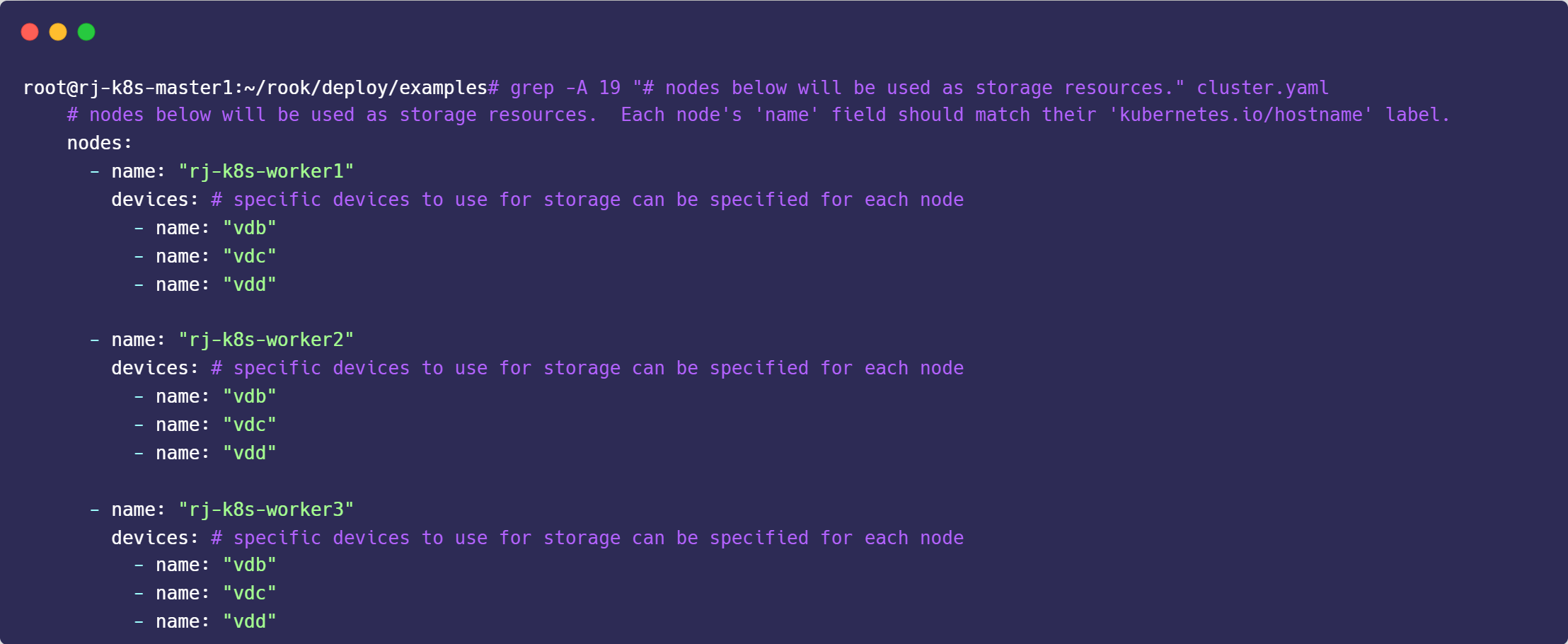

kubectl config set-context --current --namespace rook-ceph

2. Create the cluster from cluster.yaml (edit it first if needed)

kubectl create -f cluster.yaml

Note: Because Rook can discover raw devices and partitions on its own, the default cluster manifest can be used without any modifications.

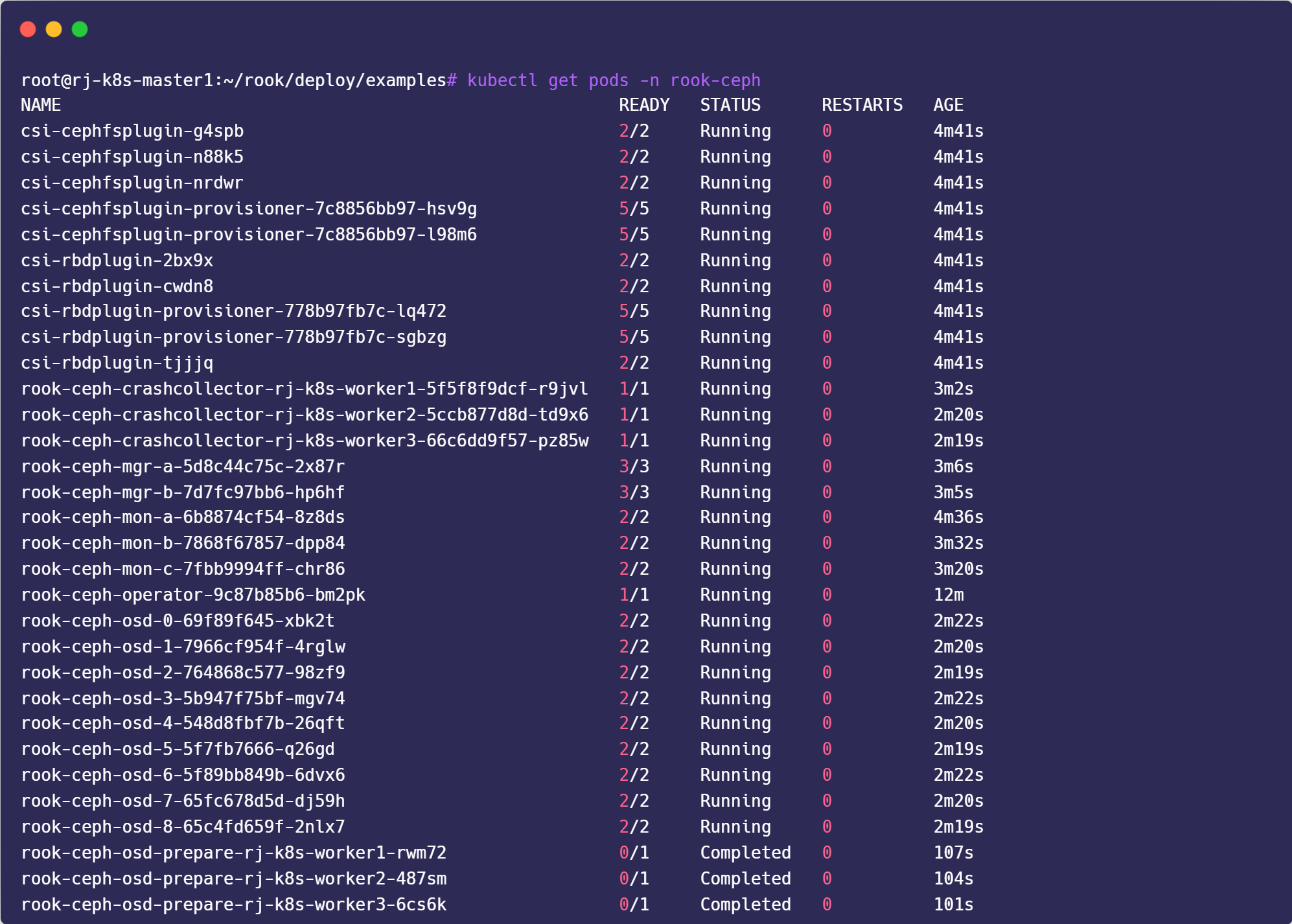

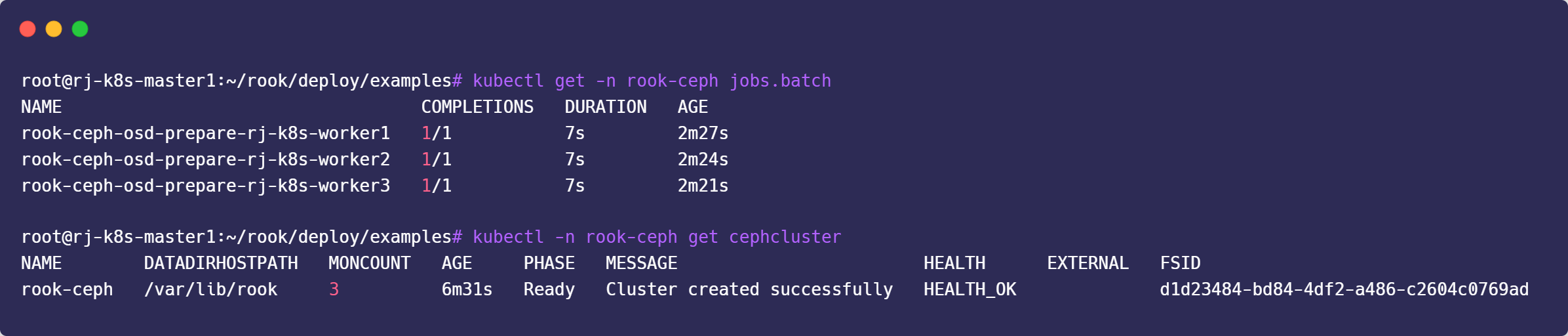

3. View the resources that were created. Deployment takes a few minutes; make sure all jobs have completed and the cluster is ready.

kubectl get -n rook-ceph jobs.batch

kubectl -n rook-ceph get cephcluster
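The readiness check can also be polled from a script. This is a sketch under a few assumptions: the CephCluster is named rook-ceph (the name in the stock cluster.yaml), its `.status.phase` field reads `Ready` once deployment has finished, and `wait_for_cephcluster` and `KUBECTL` are hypothetical names.

```shell
# Poll the CephCluster resource until its status phase is "Ready".
# Arguments: number of polls (default 60) and seconds between polls (default 10).
# KUBECTL is a hypothetical override for the kubectl binary.
wait_for_cephcluster() {
  local tries="${1:-60}" delay="${2:-10}" phase
  while [ "$tries" -gt 0 ]; do
    # Tolerate errors while the resource is still being created.
    phase="$("${KUBECTL:-kubectl}" -n rook-ceph get cephcluster rook-ceph \
      -o jsonpath='{.status.phase}' 2>/dev/null || true)"
    if [ "$phase" = "Ready" ]; then
      echo "CephCluster is Ready"
      return 0
    fi
    tries=$((tries - 1))
    sleep "$delay"
  done
  echo "CephCluster did not become Ready in time" >&2
  return 1
}
```

With the defaults this waits up to ten minutes, which should be adjusted to the size of the cluster.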

Deploy Rook Ceph toolbox in Kubernetes

The Rook Ceph toolbox is a container with common tools used for Rook debugging and testing.

1. Apply toolbox.yaml

cd ~

cd rook/deploy/examples

kubectl apply -f toolbox.yaml

2. Connect to the pod using kubectl exec

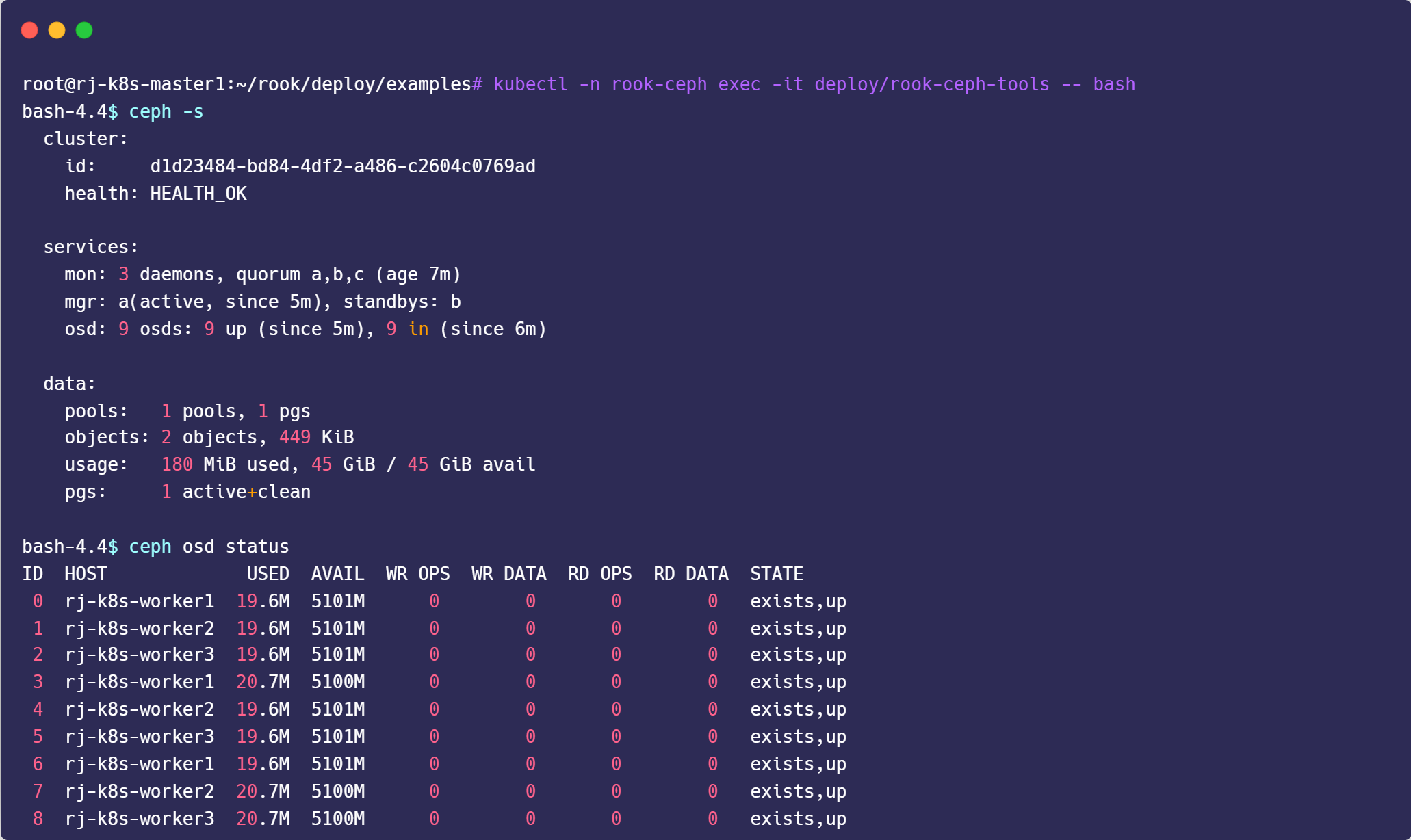

kubectl -n rook-ceph exec -it deploy/rook-ceph-tools -- bash

3. Check Ceph & OSD current status

ceph -s

ceph osd status

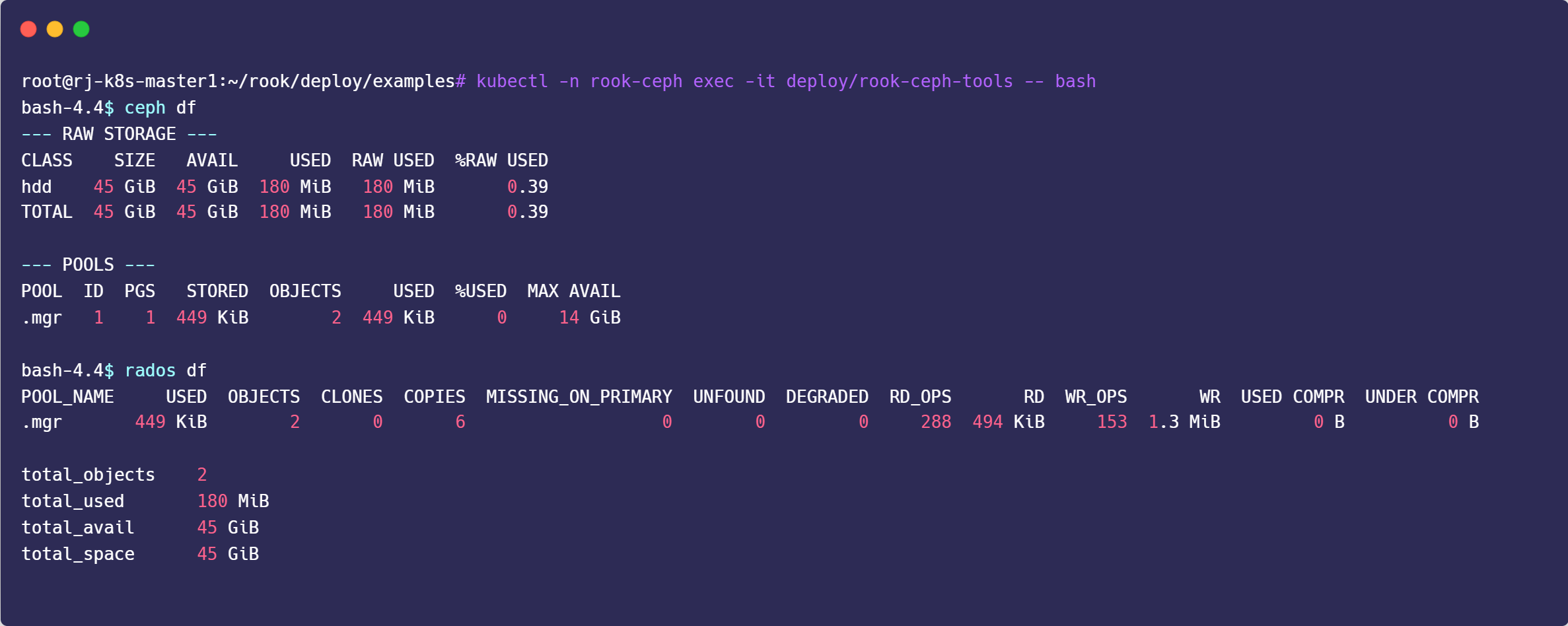

4. Check raw storage and pools

ceph df

rados df
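The same toolbox commands can be run without an interactive shell, which is handy for scripted health checks. The helper below is a minimal sketch; `ceph_is_healthy` is a hypothetical name, and it only inspects the leading status word of `ceph health` output.

```shell
# Succeed only when the given `ceph health` output starts with HEALTH_OK.
# ceph_is_healthy is a hypothetical helper; it does not talk to the cluster itself.
ceph_is_healthy() {
  case "$1" in
    HEALTH_OK*) return 0 ;;
    *) return 1 ;;
  esac
}
```

For example: `ceph_is_healthy "$(kubectl -n rook-ceph exec deploy/rook-ceph-tools -- ceph health)" && echo "cluster healthy"`.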

Working with Ceph Storage Modes

Rook exposes three types of storage:

- Shared Filesystem: Create a filesystem to be shared across multiple pods (RWX)

- Block: Create block storage to be consumed by a pod (RWO)

- Object: Create an object store that is accessible inside or outside the Kubernetes cluster

All the necessary files for each storage mode are available in the rook/deploy/examples/ directory.

cd ~/

cd rook/deploy/examples

Ceph Storage - CephFS

CephFS provides a shared filesystem that can be mounted with read/write permission from multiple pods.

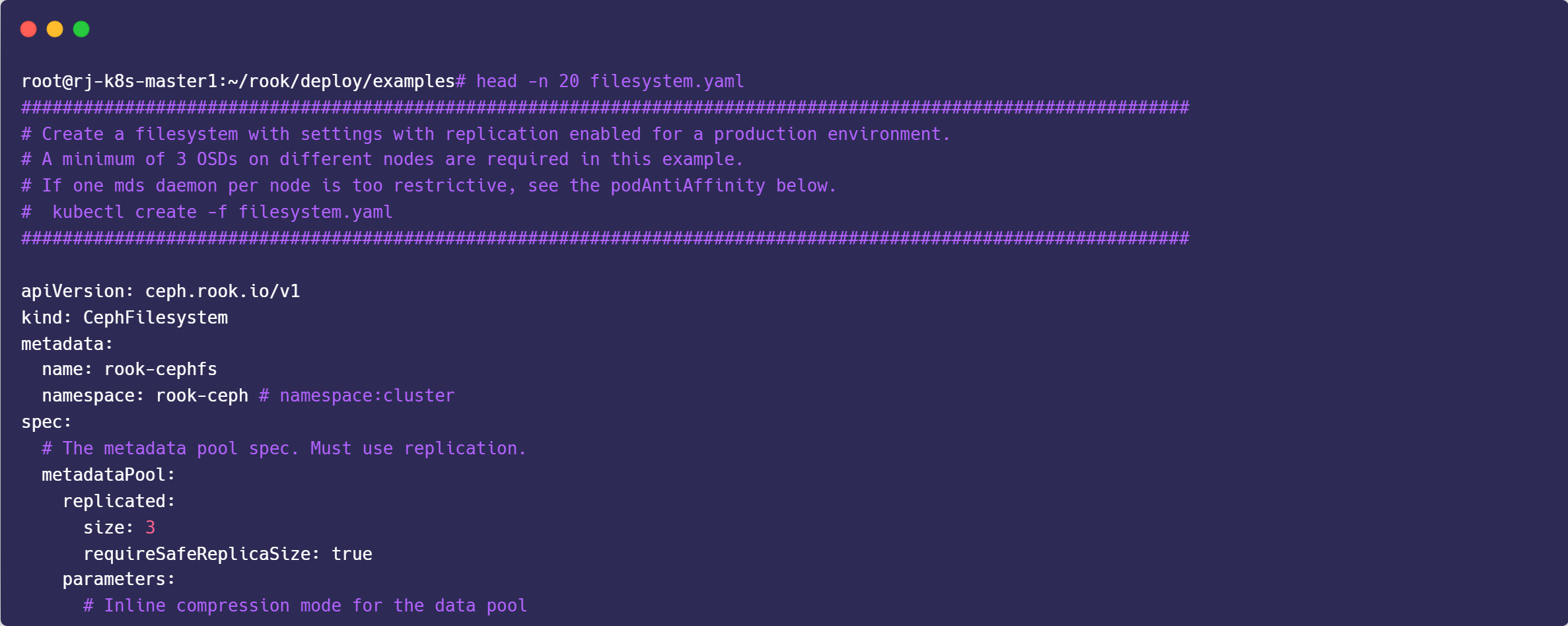

1. Update filesystem.yaml, setting the data pool name, replication size, and so on.

vim filesystem.yaml

---
apiVersion: ceph.rook.io/v1
kind: CephFilesystem
metadata:
  name: rook-cephfs
  namespace: rook-ceph # namespace:cluster

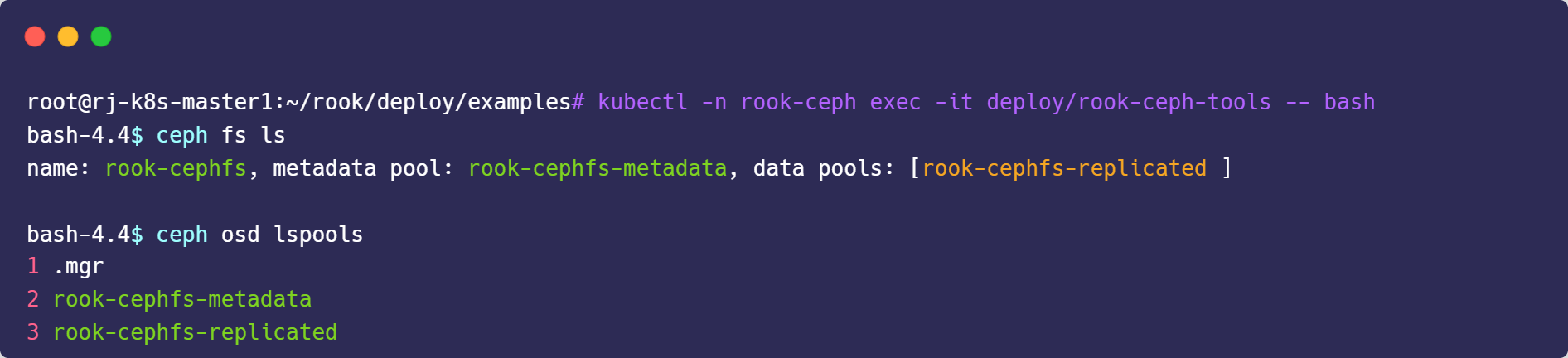

kubectl create -f filesystem.yaml

2. Access the Rook toolbox pod and check that the metadata and data pools were created.

kubectl -n rook-ceph exec -it deploy/rook-ceph-tools -- bash

ceph fs ls

ceph osd lspools

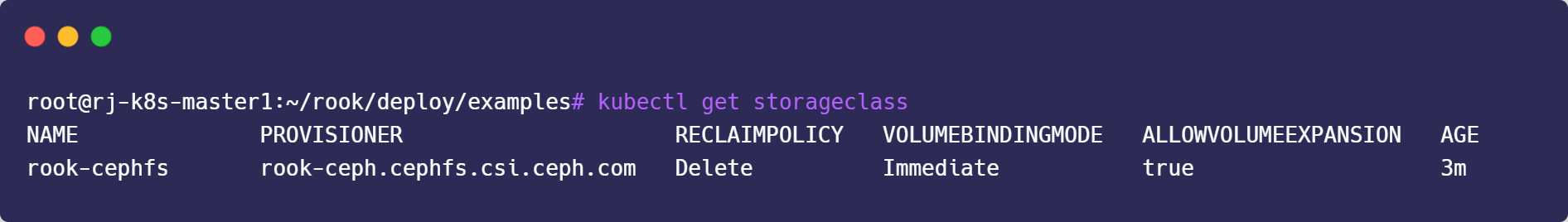

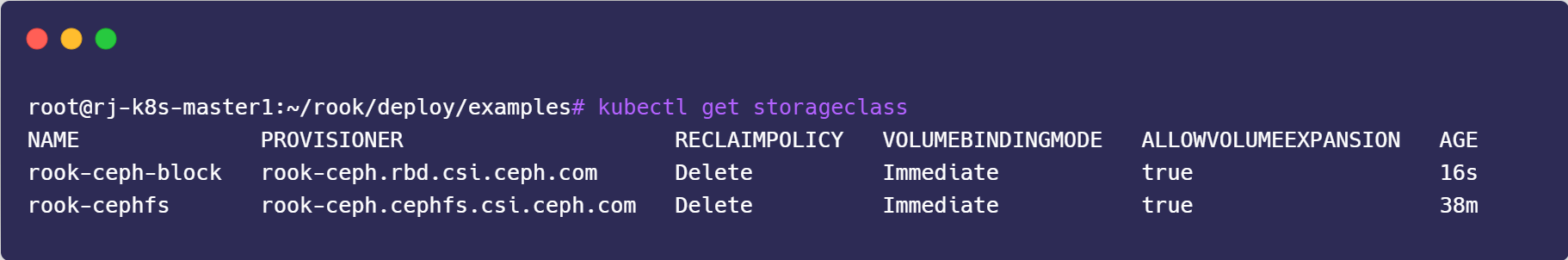

3. Create storageclass for cephfs

sudo nano csi/cephfs/storageclass.yaml

---
parameters:
  # clusterID is the namespace where the rook cluster is running
  # If you change this namespace, also change the namespace below where the secret namespaces are defined
  clusterID: rook-ceph # namespace:cluster

  # CephFS filesystem name into which the volume shall be created
  fsName: rook-cephfs

  # Ceph pool into which the volume shall be created
  # Required for provisionVolume: "true"
  pool: rook-cephfs-replicated

kubectl create -f csi/cephfs/storageclass.yaml

kubectl get sc

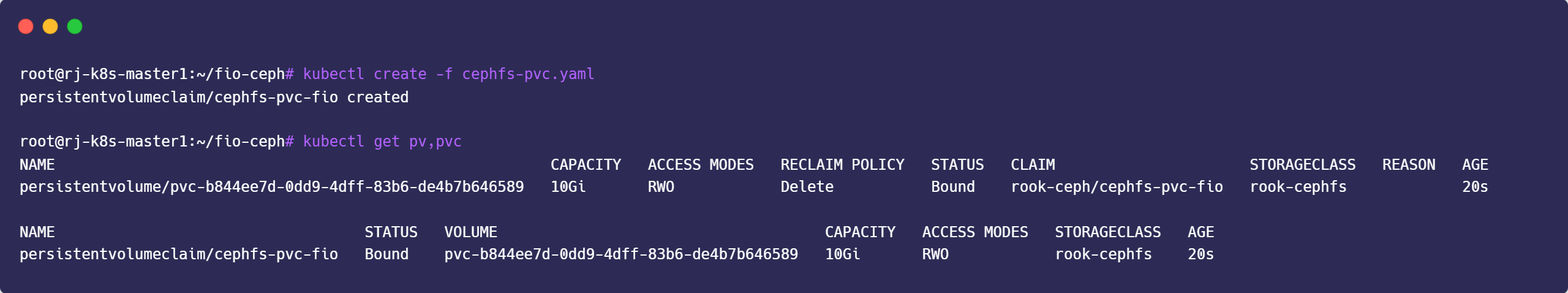

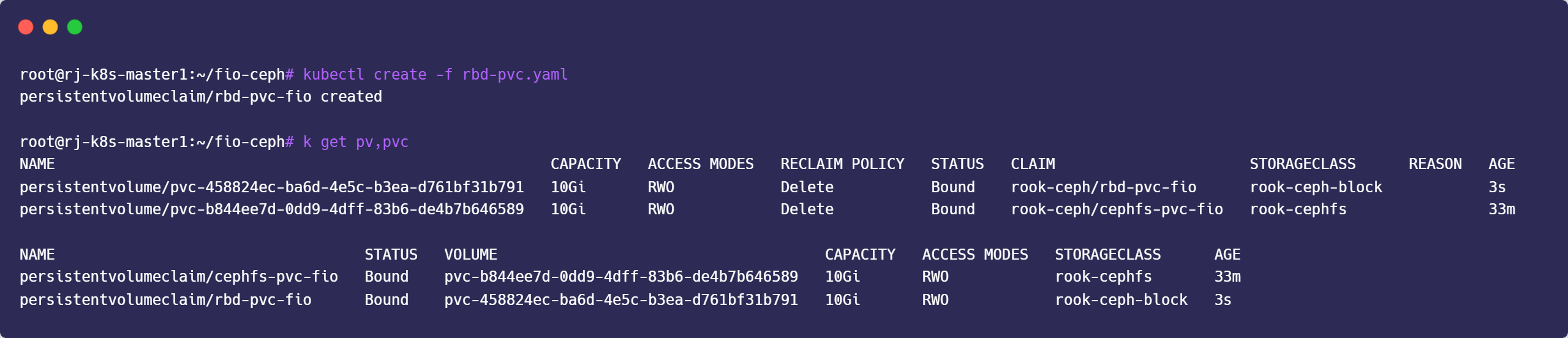

4. Create Cephfs PVC

cat <<EOF > cephfs-pvc.yaml
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: cephfs-pvc-fio
spec:
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 10Gi
  storageClassName: rook-cephfs
EOF

kubectl create -f cephfs-pvc.yaml
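Before attaching a pod, it may be worth confirming that the claim has actually been bound. The sketch below reads the claim's `.status.phase`, which is `Bound` once provisioning has succeeded. `pvc_phase` and `KUBECTL` are hypothetical names, and the command relies on the current context's default namespace, which was set to rook-ceph earlier.

```shell
# Print the phase (Pending, Bound, or Lost) of the named PersistentVolumeClaim.
# Uses the current context's namespace.
# KUBECTL is a hypothetical override for the kubectl binary.
pvc_phase() {
  "${KUBECTL:-kubectl}" get pvc "$1" -o jsonpath='{.status.phase}'
}
```

For example, `[ "$(pvc_phase cephfs-pvc-fio)" = "Bound" ] && echo "PVC is bound"`.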

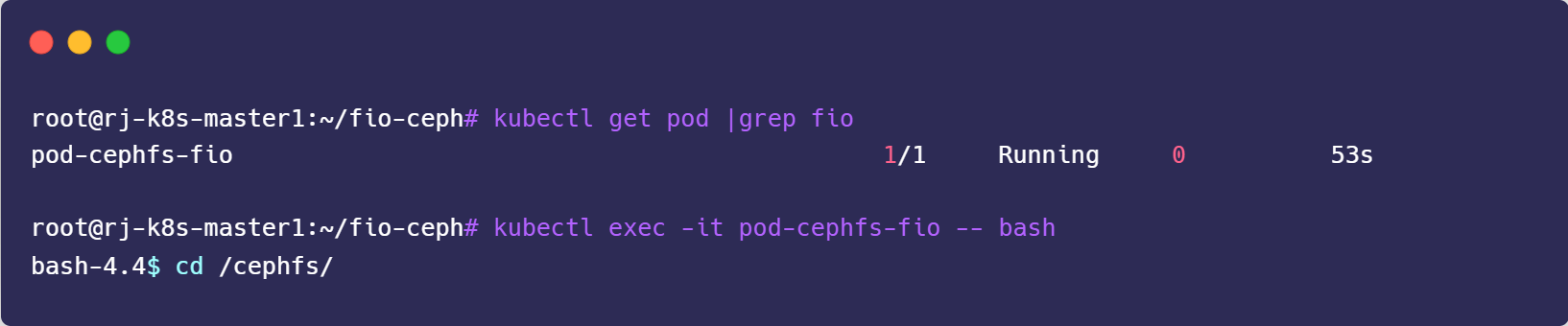

5. Create a fio pod for benchmarking, with cephfs-pvc-fio mounted

cat <<EOF > cephfs-fio-pod.yaml
---
apiVersion: v1
kind: Pod
metadata:
  name: pod-cephfs-fio
spec:
  containers:
    - name: pod-cephfs-fio
      image: vineethac/fio_image
      command: [ "/bin/bash", "-c", "--" ]
      args: [ "while true; do sleep 30; done;" ]
      imagePullPolicy: IfNotPresent
      volumeMounts:
        - name: cephfs-pvc-fio
          mountPath: /cephfs
  volumes:
    - name: cephfs-pvc-fio
      persistentVolumeClaim:
        claimName: cephfs-pvc-fio
        readOnly: false
EOF

kubectl create -f cephfs-fio-pod.yaml
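The benchmark commands are meant to be run from a shell inside the fio pod. One way to get there, sketched under the assumption that the pod lives in the current context's namespace, is to wait for the pod to become Ready and then exec into it; `open_pod_shell` and `KUBECTL` are hypothetical names.

```shell
# Wait for the named pod to become Ready, then open an interactive bash shell in it.
# KUBECTL is a hypothetical override for the kubectl binary.
open_pod_shell() {
  local pod="$1"
  "${KUBECTL:-kubectl}" wait --for=condition=Ready "pod/$pod" --timeout=120s &&
    "${KUBECTL:-kubectl}" exec -it "$pod" -- bash
}
```

For the CephFS test that would be `open_pod_shell pod-cephfs-fio`; the same helper works for any other pod name.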

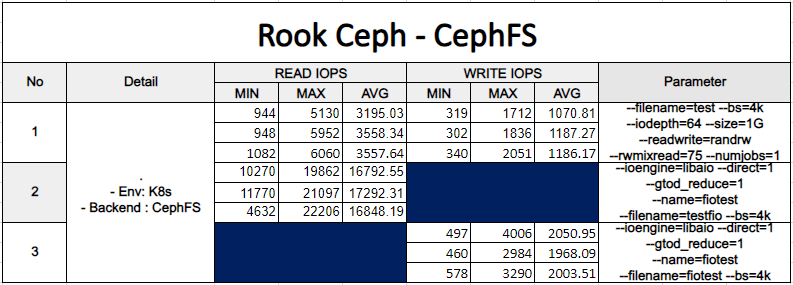

6. Run the fio benchmark from a shell inside the pod, in the mounted directory

cd /cephfs

for i in {1..3}; do fio --randrepeat=0 --ioengine=libaio --direct=1 --gtod_reduce=1 --name=test --filename=test --bs=4k --iodepth=64 --size=1G --readwrite=randrw --rwmixread=75 --numjobs=1 --time_based --runtime=90s --ramp_time=2s --invalidate=1 --verify=0 --verify_fatal=0 --group_reporting; done

for i in {1..3}; do fio --randrepeat=1 --ioengine=libaio --direct=1 --gtod_reduce=1 --name=fiotest --filename=testfio --bs=4k --iodepth=64 --size=1G --readwrite=randread; done

for i in {1..3}; do fio --randrepeat=1 --ioengine=libaio --direct=1 --gtod_reduce=1 --name=fiotest --filename=fiotest --bs=4k --iodepth=64 --size=1G --readwrite=randwrite; done

7. Benchmark Result
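To compare runs, the IOPS figures can be pulled out of fio's human-readable output. This is a sketch that assumes summary lines of the form `read: IOPS=4583, BW=...` and `write: IOPS=..., BW=...`; other fio versions or output formats may differ, and `extract_iops` is a hypothetical helper name.

```shell
# Print "<direction> <IOPS>" for each read/write summary line of fio output
# read from stdin. Assumes lines shaped like "  read: IOPS=4583, BW=17.9MiB/s ...".
extract_iops() {
  sed -nE 's/^ *(read|write): IOPS=([^,]*),.*/\1 \2/p'
}
```

Inside the pod, a run could be piped through it, for example `fio ... --readwrite=randread | extract_iops`.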

Ceph Storage - RBD

Block storage allows a single pod to mount storage (RWO mode).

1. Before Rook can provision storage, a StorageClass and CephBlockPool need to be created.

kubectl create -f csi/rbd/storageclass.yaml

2. Create RBD PVC

cat <<EOF > rbd-pvc.yaml
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: rbd-pvc-fio
spec:
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 10Gi
  storageClassName: rook-ceph-block
EOF

kubectl create -f rbd-pvc.yaml

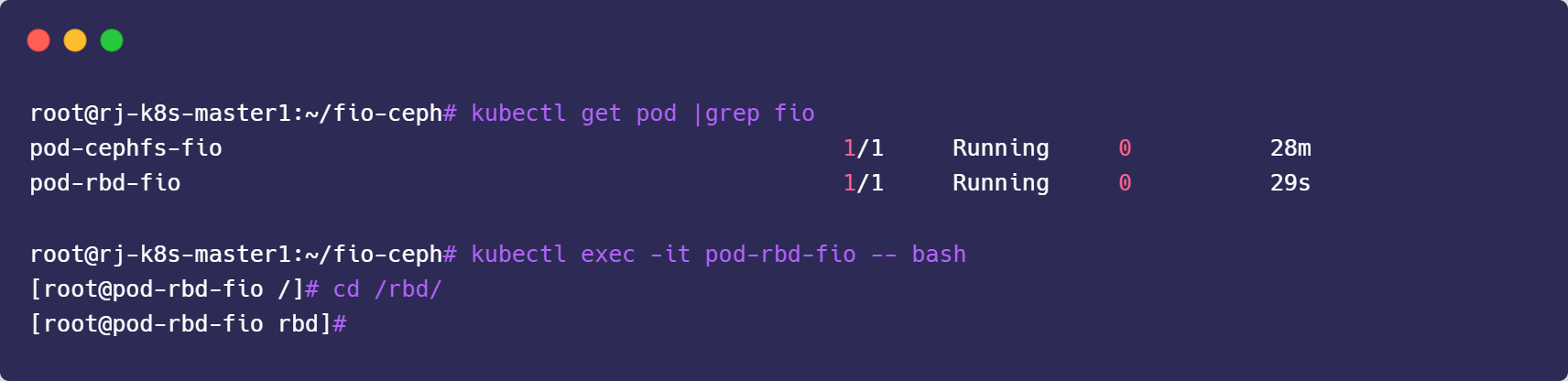

3. Create a fio pod for benchmarking, with rbd-pvc-fio mounted

cat <<EOF > rbd-fio-pod.yaml
---
apiVersion: v1
kind: Pod
metadata:
  name: pod-rbd-fio
spec:
  containers:
    - name: pod-rbd-fio
      image: vineethac/fio_image
      command: [ "/bin/bash", "-c", "--" ]
      args: [ "while true; do sleep 30; done;" ]
      imagePullPolicy: IfNotPresent
      volumeMounts:
        - name: rbd-pvc-fio
          mountPath: /rbd
  volumes:
    - name: rbd-pvc-fio
      persistentVolumeClaim:
        claimName: rbd-pvc-fio
        readOnly: false
EOF

kubectl create -f rbd-fio-pod.yaml

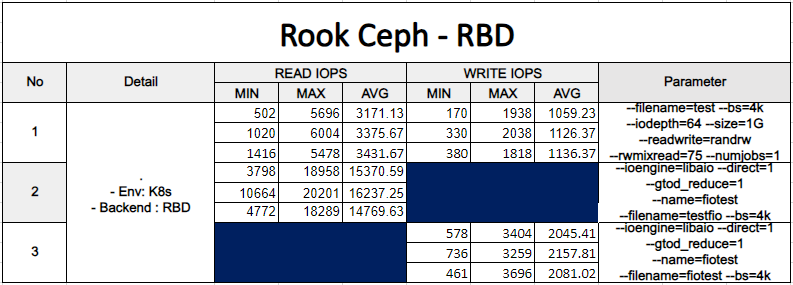

4. Run the fio benchmark from a shell inside the pod, in the mounted directory

cd /rbd/

for i in {1..3}; do fio --randrepeat=0 --ioengine=libaio --direct=1 --gtod_reduce=1 --name=test --filename=test --bs=4k --iodepth=64 --size=1G --readwrite=randrw --rwmixread=75 --numjobs=1 --time_based --runtime=90s --ramp_time=2s --invalidate=1 --verify=0 --verify_fatal=0 --group_reporting; done

for i in {1..3}; do fio --randrepeat=1 --ioengine=libaio --direct=1 --gtod_reduce=1 --name=fiotest --filename=testfio --bs=4k --iodepth=64 --size=1G --readwrite=randread; done

for i in {1..3}; do fio --randrepeat=1 --ioengine=libaio --direct=1 --gtod_reduce=1 --name=fiotest --filename=fiotest --bs=4k --iodepth=64 --size=1G --readwrite=randwrite; done

5. Benchmark Result

Tearing Down the Ceph Cluster (Reference only)

If you want to tear down the cluster and bring up a new one, be aware of the following resources that will need to be cleaned up:

- rook-ceph namespace: The Rook operator and cluster created by operator.yaml and cluster.yaml (the cluster CRD)

- /var/lib/rook: Path on each host in the cluster where configuration is cached by the Ceph mons and OSDs

All CRDs in the cluster:

root@rj-k8s-master1:~# kubectl get crds

NAME CREATED AT

bgpconfigurations.crd.projectcalico.org 2023-06-23T09:32:45Z

bgpfilters.crd.projectcalico.org 2023-06-23T09:32:45Z

bgppeers.crd.projectcalico.org 2023-06-23T09:32:45Z

blockaffinities.crd.projectcalico.org 2023-06-23T09:32:45Z

caliconodestatuses.crd.projectcalico.org 2023-06-23T09:32:45Z

cephblockpoolradosnamespaces.ceph.rook.io 2023-06-26T03:22:19Z

cephblockpools.ceph.rook.io 2023-06-26T03:22:19Z

cephbucketnotifications.ceph.rook.io 2023-06-26T03:22:19Z

cephbuckettopics.ceph.rook.io 2023-06-26T03:22:19Z

cephclients.ceph.rook.io 2023-06-26T03:22:19Z

cephclusters.ceph.rook.io 2023-06-26T03:22:19Z

cephfilesystemmirrors.ceph.rook.io 2023-06-26T03:22:19Z

cephfilesystems.ceph.rook.io 2023-06-26T03:22:19Z

cephfilesystemsubvolumegroups.ceph.rook.io 2023-06-26T03:22:19Z

cephnfses.ceph.rook.io 2023-06-26T03:22:19Z

cephobjectrealms.ceph.rook.io 2023-06-26T03:22:19Z

cephobjectstores.ceph.rook.io 2023-06-26T03:22:20Z

cephobjectstoreusers.ceph.rook.io 2023-06-26T03:22:20Z

cephobjectzonegroups.ceph.rook.io 2023-06-26T03:22:20Z

cephobjectzones.ceph.rook.io 2023-06-26T03:22:20Z

cephrbdmirrors.ceph.rook.io 2023-06-26T03:22:20Z

clusterinformations.crd.projectcalico.org 2023-06-23T09:32:45Z

felixconfigurations.crd.projectcalico.org 2023-06-23T09:32:45Z

globalnetworkpolicies.crd.projectcalico.org 2023-06-23T09:32:45Z

globalnetworksets.crd.projectcalico.org 2023-06-23T09:32:45Z

hostendpoints.crd.projectcalico.org 2023-06-23T09:32:45Z

ipamblocks.crd.projectcalico.org 2023-06-23T09:32:45Z

ipamconfigs.crd.projectcalico.org 2023-06-23T09:32:45Z

ipamhandles.crd.projectcalico.org 2023-06-23T09:32:45Z

ippools.crd.projectcalico.org 2023-06-23T09:32:45Z

ipreservations.crd.projectcalico.org 2023-06-23T09:32:46Z

kubecontrollersconfigurations.crd.projectcalico.org 2023-06-23T09:32:46Z

networkpolicies.crd.projectcalico.org 2023-06-23T09:32:46Z

networksets.crd.projectcalico.org 2023-06-23T09:32:46Z

objectbucketclaims.objectbucket.io 2023-06-26T03:22:20Z

objectbuckets.objectbucket.io 2023-06-26T03:22:20Z

Edit the CephCluster and add the cleanupPolicy

kubectl -n rook-ceph patch cephcluster rook-ceph --type merge -p '{"spec":{"cleanupPolicy":{"confirmation":"yes-really-destroy-data"}}}'

Delete block storage and file storage:

cd ~/

cd rook/deploy/examples

kubectl delete -n rook-ceph cephblockpool replicapool

kubectl delete -f csi/rbd/storageclass.yaml

kubectl delete -f filesystem.yaml

kubectl delete -f csi/cephfs/storageclass.yaml

Delete the CephCluster Custom Resource:

[root@k8s-bastion ~]# kubectl -n rook-ceph delete cephcluster rook-ceph

cephcluster.ceph.rook.io "rook-ceph" deleted

Verify that the cluster CR has been deleted before continuing to the next step.

kubectl -n rook-ceph get cephcluster

Delete the Operator and related Resources

kubectl delete -f operator.yaml

kubectl delete -f common.yaml

kubectl delete -f crds.yaml

Zapping Devices
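Zapping is destructive, so a guard against pointing it at a disk that is in use by the OS may be worthwhile. The sketch below checks a device path against a mount table in /proc/mounts format; `is_mounted` is a hypothetical helper name, and the prefix match is deliberately conservative (a device counts as mounted if any of its partitions is).

```shell
# Succeed if DEVICE, or any device path starting with it (such as a partition),
# appears as a mount source in the mount table read from stdin.
# The expected input format is that of /proc/mounts.
is_mounted() {
  awk -v dev="$1" 'index($1, dev) == 1 { found = 1 } END { exit !found }'
}
```

On a storage node, something like `is_mounted /dev/vdb < /proc/mounts && echo "refusing to zap /dev/vdb"` could be run for each disk before wiping it.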

# Set the raw disk / raw partition paths
DISKS="/dev/vdb /dev/vdc /dev/vdd"

# Zap each disk to a fresh, usable state (zap-all is important, because the MBR has to be clean)
# Install: yum install gdisk -y or apt install gdisk
for DISK in $DISKS; do
  sgdisk --zap-all "$DISK"
done

# Clean HDDs with dd
for DISK in $DISKS; do
  dd if=/dev/zero of="$DISK" bs=1M count=100 oflag=direct,dsync
done

# Clean disks such as SSDs with blkdiscard instead of dd
for DISK in $DISKS; do
  blkdiscard "$DISK"
done

# Remove mapped devices from ceph-volume setup
ls /dev/mapper/ceph-* | xargs -I% -- dmsetup remove %

# Remove ceph-<UUID> directories
rm -rf /dev/ceph-*
rm -rf /dev/mapper/ceph--*

# Inform the OS of partition table changes
for DISK in $DISKS; do
  partprobe "$DISK"
done

Removing the Cluster CRD Finalizer:

for CRD in $(kubectl get crd -n rook-ceph | awk '/ceph.rook.io/ {print $1}'); do
  kubectl get -n rook-ceph "$CRD" -o name | \
    xargs -I {} kubectl patch -n rook-ceph {} --type merge -p '{"metadata":{"finalizers": [null]}}'
done

If the namespace is still stuck in the Terminating state, as seen in the command below:

$ kubectl get ns rook-ceph

NAME STATUS AGE

rook-ceph Terminating 5h

You can check which resources are holding up the deletion, remove their finalizers, and delete those resources.

kubectl api-resources --verbs=list --namespaced -o name | xargs -n 1 kubectl get --show-kind --ignore-not-found -n rook-ceph

In my output, the remaining resource is a configmap named rook-ceph-mon-endpoints:

NAME DATA AGE

configmap/rook-ceph-mon-endpoints 4 23h

Delete the resource manually:

# kubectl delete configmap/rook-ceph-mon-endpoints -n rook-ceph

configmap "rook-ceph-mon-endpoints" deleted

Reference