Introduction

OVN (Open Virtual Network) is a set of daemons for Open vSwitch that translates virtual network configurations into OpenFlow. It provides virtual networking for any type of workload on a virtualized platform (virtual machines and containers) through the same API.

Unlike Open vSwitch, OVN operates at a higher abstraction level, managing logical routers and switches instead of individual flows. You can find more information in the OVN architecture documentation.

In this post, I’ll guide you through attaching multiple networks to KubeVirt VMs. VMs on the same subnet will be able to reach external networks and communicate with each other, creating a seamless, interconnected virtual environment. Because this SDN approach relies on virtual networking infrastructure, there is no need to provision VLANs or other physical network resources.

Requirements

To run this demo, you will need a Kubernetes cluster with the following components installed:

- Kube-OVN (the CNI used by the manifests in this demo). Installation Reference

- multus-cni. Installation Reference

- KubeVirt. Installation Reference. Install virtctl at the same time so that you can connect to the VMs after they are created.

Demo

1. Define the overlay network

Apply the following YAML to define the overlay network, which configures the secondary attachment for the KubeVirt VMs. Refer to the Kube-OVN user documentation for details on each option.

    cat <<EOF | kubectl apply -f -
    apiVersion: "k8s.cni.cncf.io/v1"
    kind: NetworkAttachmentDefinition
    metadata:
      name: kube-ovn-att1
      namespace: default
    spec:
      config: '{
          "cniVersion": "0.3.0",
          "type": "kube-ovn",
          "server_socket": "/run/openvswitch/kube-ovn-daemon.sock",
          "provider": "kube-ovn-att1.default.ovn"
        }'
    EOF

    kubectl get network-attachment-definitions.k8s.cni.cncf.io kube-ovn-att1

    NAME            AGE
    kube-ovn-att1   5m

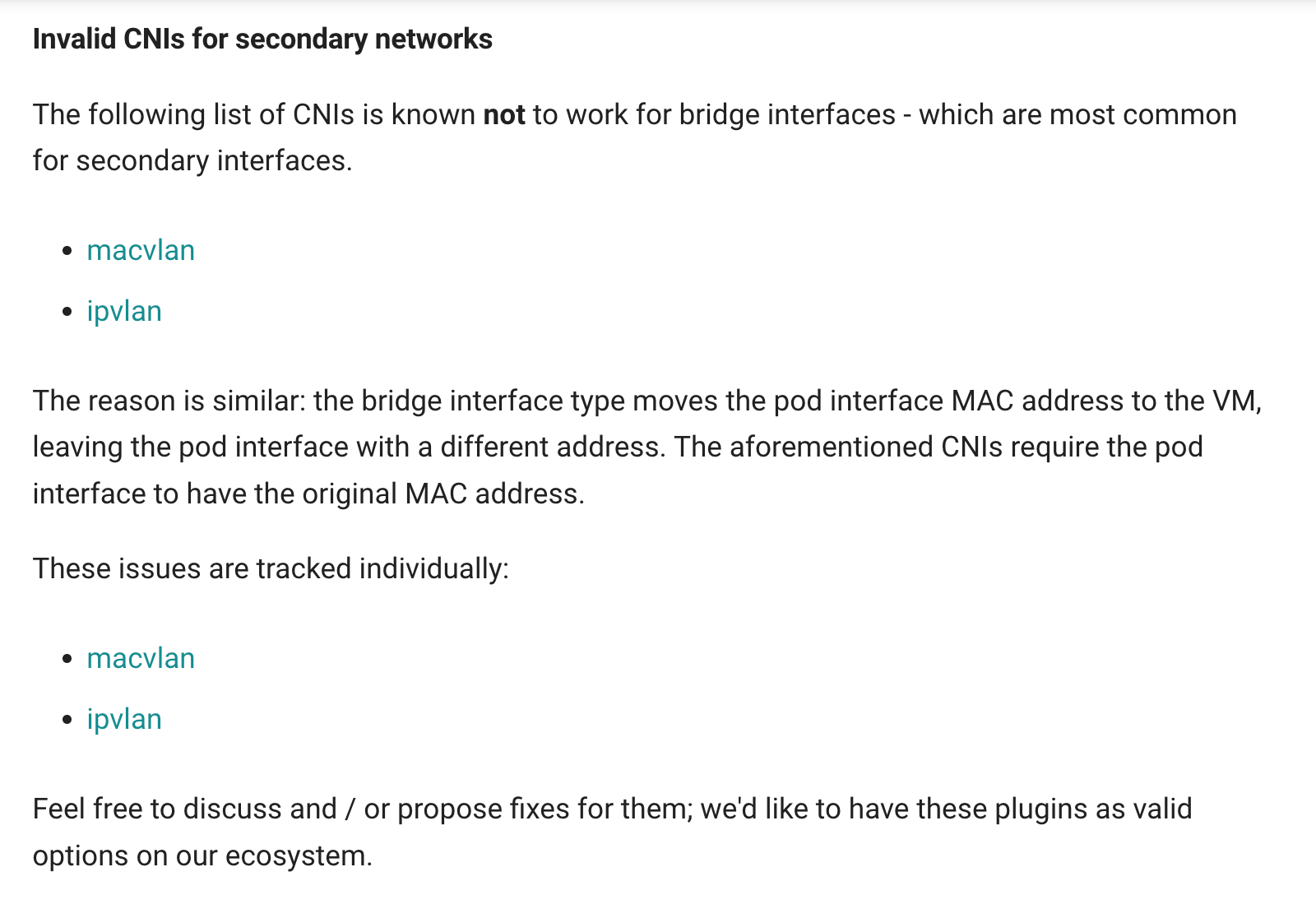

When using KubeVirt with Multus to expose virtual machines to the external network, it is important to configure a bridge interface rather than using macvlan or ipvlan.

The KubeVirt documentation indicates that these CNIs do not work for bridge interfaces, which are commonly used for secondary network configurations. This limitation arises because the bridge interface type transfers the pod's MAC address to the VM, while macvlan and ipvlan CNIs require the pod interface to retain its original MAC address. As a result, they are marked as invalid in this context.

2. Create the Corresponding Subnet

    cat <<EOF | kubectl apply -f -
    apiVersion: kubeovn.io/v1
    kind: Subnet
    metadata:
      name: kubeovn-subnet
    spec:
      protocol: IPv4
      provider: kube-ovn-att1.default.ovn
      cidrBlock: 172.16.0.0/16
      gateway: 172.16.0.1
      excludeIps:
        - 172.16.0.1..172.16.0.10
    EOF

The provider must match the provider defined in the NetworkAttachmentDefinition from Step 1. When an IP address is assigned to an additional NIC on a VM, that NIC is associated with the subnet based on the provider.
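The provider string used in this post follows the pattern `<attachment name>.<namespace>.ovn`. As a minimal sketch, the snippet below rebuilds that string from the names used in Step 1 and defines a small helper that compares it with the provider stored on the Subnet. The helper name `check_subnet_provider` is hypothetical, and it only does something useful on a cluster where the Subnet already exists.

```shell
#!/usr/bin/env bash
# Names taken from the NetworkAttachmentDefinition in Step 1.
nad_name="kube-ovn-att1"
nad_namespace="default"

# Provider pattern used in this post: <name>.<namespace>.ovn
expected_provider="${nad_name}.${nad_namespace}.ovn"
echo "expected provider: ${expected_provider}"

# Hypothetical helper: read .spec.provider from a Subnet and compare it
# with the expected value. Requires kubectl access to the cluster.
check_subnet_provider() {
  local subnet="$1" actual
  actual="$(kubectl get subnet "$subnet" -o jsonpath='{.spec.provider}')" || return 1
  if [ "$actual" = "$expected_provider" ]; then
    echo "OK: ${subnet} uses ${actual}"
  else
    echo "MISMATCH: ${subnet} uses ${actual}, expected ${expected_provider}" >&2
    return 1
  fi
}
```

On a cluster where Step 2 has been applied, `check_subnet_provider kubeovn-subnet` would be the call to make.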

Adjust the CIDR block, gateway, and excluded IP ranges to suit your environment.
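As a quick sanity check on these values, the number of allocatable addresses can be worked out by hand. The sketch below assumes that the network and broadcast addresses are not allocatable and that the ten excluded addresses (172.16.0.1 through 172.16.0.10, which include the gateway) are subtracted once. The result is consistent with the V4AVAILABLE value of 65524 reported by `kubectl get subnet` for this subnet.

```shell
#!/usr/bin/env bash
prefix_len=16      # from cidrBlock 172.16.0.0/16
excluded=10        # 172.16.0.1..172.16.0.10, inclusive

total=$(( 1 << (32 - prefix_len) ))   # 65536 addresses in a /16
# Assumption: the network and broadcast addresses are not allocatable.
available=$(( total - 2 - excluded ))

echo "total=${total} available=${available}"
```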

    kubectl get subnet kubeovn-subnet

    NAME             PROVIDER                    VPC           PROTOCOL   CIDR            PRIVATE   NAT     DEFAULT   GATEWAYTYPE   V4USED   V4AVAILABLE   V6USED   V6AVAILABLE   EXCLUDEIPS                    U2OINTERCONNECTIONIP
    kubeovn-subnet   kube-ovn-att1.default.ovn   ovn-cluster   IPv4       172.16.0.0/16   false     false   false     distributed   0        65524         0        0             ["172.16.0.1..172.16.0.10"]

3. Create 2 VMs

Create two Ubuntu virtual machines. Each manifest sets the annotation k8s.v1.cni.cncf.io/networks: kube-ovn-att1 at the VirtualMachine level so that the VM connects to the specified network. At the template level, the annotation kubevirt.io/allow-pod-bridge-network-live-migration: "" enables live migration for VMs that use a pod bridge network. Save the two manifests as vm1.yaml and vm2.yaml.

    ---
    apiVersion: kubevirt.io/v1
    kind: VirtualMachine
    metadata:
      name: rj-ubuntu-1
      annotations:
        k8s.v1.cni.cncf.io/networks: kube-ovn-att1
    spec:
      runStrategy: Always
      template:
        metadata:
          annotations:
            kubevirt.io/allow-pod-bridge-network-live-migration: ""
          labels:
            kubevirt.io/size: small
            kubevirt.io/domain: rj-ubuntu-1
        spec:
          domain:
            cpu:
              cores: 2
            devices:
              disks:
                - name: disk0
                  disk:
                    bus: virtio
                - name: cloudinit
                  cdrom:
                    bus: sata
                    readonly: true
              rng: {}
              interfaces:
                - name: default
                  bridge: {}
                - name: secondary
                  bridge: {}
            resources:
              requests:
                memory: 1024Mi
          terminationGracePeriodSeconds: 15
          networks:
            - name: default
              pod: {}
            - name: secondary
              multus:
                networkName: default/kube-ovn-att1
          volumes:
            - name: disk0
              dataVolume:
                name: rj-ubuntu-1
            - name: cloudinit
              cloudInitNoCloud:
                userData: |-
                  #cloud-config
                  chpasswd:
                    list: |
                      ubuntu:ubuntu
                    expire: False
                  disable_root: false
                  ssh_pwauth: True
                  ssh_authorized_keys:
                    - ssh-rsa AAAA...
                networkData: |
                  version: 2
                  ethernets:
                    enp1s0:
                      dhcp4: true
                    enp2s0:
                      addresses: [ 172.16.0.11/24 ]
      dataVolumeTemplates:
        - metadata:
            name: rj-ubuntu-1
          spec:
            storage:
              storageClassName: storage-nvme-c1
              # volumeMode: Filesystem
              accessModes:
                - ReadWriteMany
              resources:
                requests:
                  storage: 10Gi
            source:
              registry:
                url: docker://quay.io/containerdisks/ubuntu:22.04

    ---
    apiVersion: kubevirt.io/v1
    kind: VirtualMachine
    metadata:
      name: rj-ubuntu-2
      annotations:
        k8s.v1.cni.cncf.io/networks: kube-ovn-att1
    spec:
      runStrategy: Always
      template:
        metadata:
          annotations:
            kubevirt.io/allow-pod-bridge-network-live-migration: ""
          labels:
            kubevirt.io/size: small
            kubevirt.io/domain: rj-ubuntu-2
        spec:
          domain:
            cpu:
              cores: 2
            devices:
              disks:
                - name: disk0
                  disk:
                    bus: virtio
                - name: cloudinit
                  cdrom:
                    bus: sata
                    readonly: true
              rng: {}
              interfaces:
                - name: default
                  bridge: {}
                - name: secondary
                  bridge: {}
            resources:
              requests:
                memory: 1024Mi
          terminationGracePeriodSeconds: 15
          networks:
            - name: default
              pod: {}
            - name: secondary
              multus:
                networkName: default/kube-ovn-att1
          volumes:
            - name: disk0
              dataVolume:
                name: rj-ubuntu-2
            - name: cloudinit
              cloudInitNoCloud:
                userData: |-
                  #cloud-config
                  chpasswd:
                    list: |
                      ubuntu:ubuntu
                    expire: False
                  disable_root: false
                  ssh_pwauth: True
                  ssh_authorized_keys:
                    - ssh-rsa AAAA...
                networkData: |
                  version: 2
                  ethernets:
                    enp1s0:
                      dhcp4: true
                    enp2s0:
                      addresses: [ 172.16.0.12/24 ]
      dataVolumeTemplates:
        - metadata:
            name: rj-ubuntu-2
          spec:
            storage:
              storageClassName: storage-nvme-c1
              # volumeMode: Filesystem
              accessModes:
                - ReadWriteMany
              resources:
                requests:
                  storage: 10Gi
            source:
              registry:
                url: docker://quay.io/containerdisks/ubuntu:22.04
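The two manifests differ only in the VM name and in the static address assigned to enp2s0. If you prefer not to maintain both by hand, the second file can be derived from the first. The sketch below is one way to do that with sed; `derive_vm_manifest` is a hypothetical helper written for this post, not part of KubeVirt or Kube-OVN.

```shell
#!/usr/bin/env bash
# Hypothetical helper: copy a VM manifest, swapping the VM name and the
# static address on the secondary NIC.
derive_vm_manifest() {
  local src="$1" dst="$2" old_name="$3" new_name="$4" old_ip="$5" new_ip="$6"
  # sed treats the patterns as regular expressions, so "." matches any
  # character; that is harmless for the names and addresses used here.
  sed -e "s|${old_name}|${new_name}|g" \
      -e "s|${old_ip}|${new_ip}|g" \
      "$src" > "$dst"
}

# Only runs when vm1.yaml is present in the current directory.
if [ -f vm1.yaml ]; then
  derive_vm_manifest vm1.yaml vm2.yaml \
    rj-ubuntu-1 rj-ubuntu-2 172.16.0.11/24 172.16.0.12/24
fi
```

Review the generated file before applying it, since a plain text substitution will also rewrite any unrelated string that happens to contain the old name.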

    kubectl apply -f vm1.yaml -f vm2.yaml

Monitor DataVolume creation with the following command:

    kubectl get dv -w

Once the VMs are ready, their status should look like this:

    kubectl get dv,vmi rj-ubuntu-1 rj-ubuntu-2

    NAME                                     PHASE       PROGRESS   RESTARTS   AGE
    datavolume.cdi.kubevirt.io/rj-ubuntu-1   Succeeded   100.0%                104s
    datavolume.cdi.kubevirt.io/rj-ubuntu-2   Succeeded   100.0%                104s

    NAME                                             AGE   PHASE     IP              NODENAME          READY
    virtualmachineinstance.kubevirt.io/rj-ubuntu-1   39s   Running   10.240.169.40   raja-k3s-agent2   True
    virtualmachineinstance.kubevirt.io/rj-ubuntu-2   42s   Running   10.240.169.39   raja-k3s-agent3   True

4. Access VMs & Verify connectivity

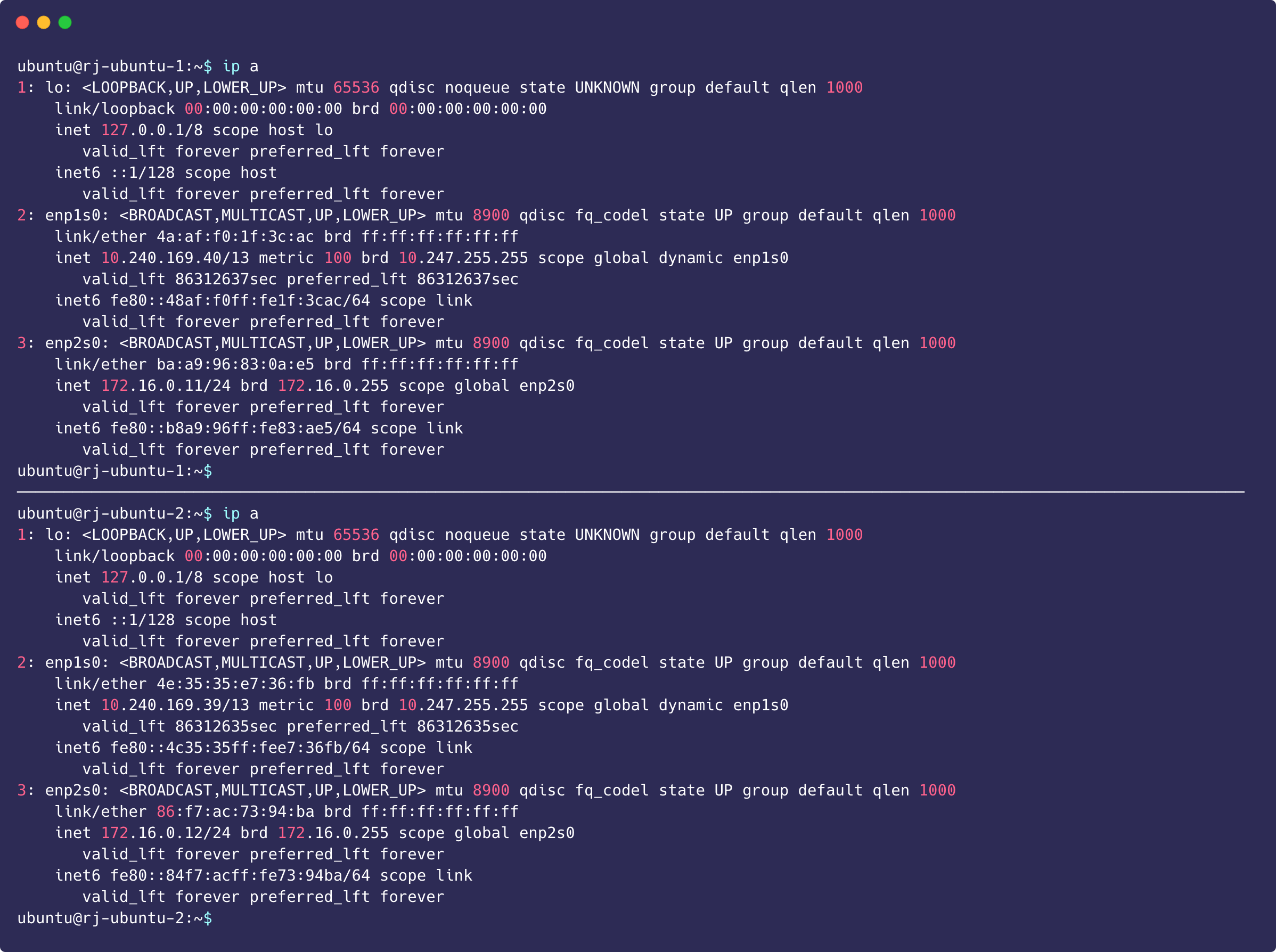

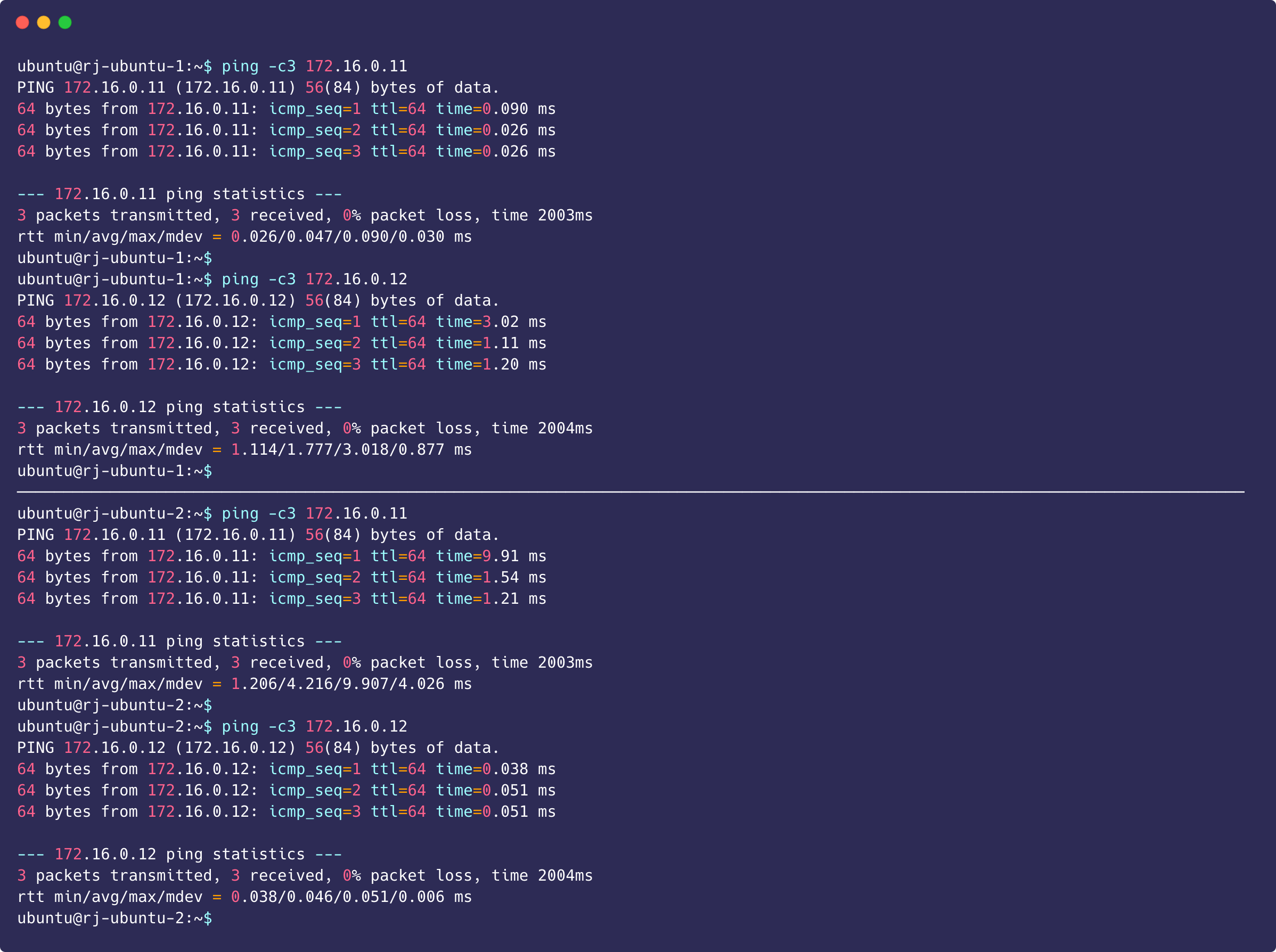

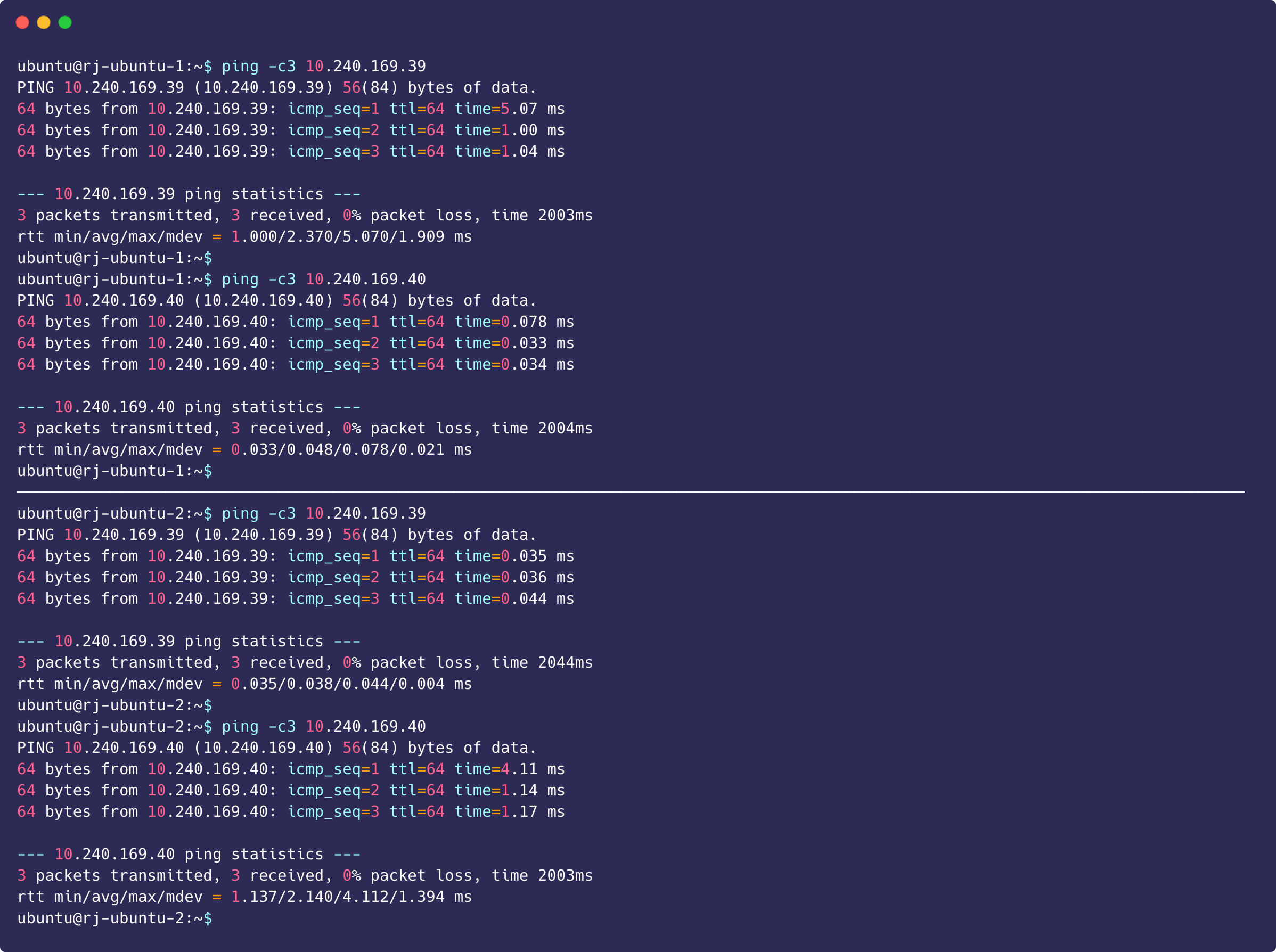

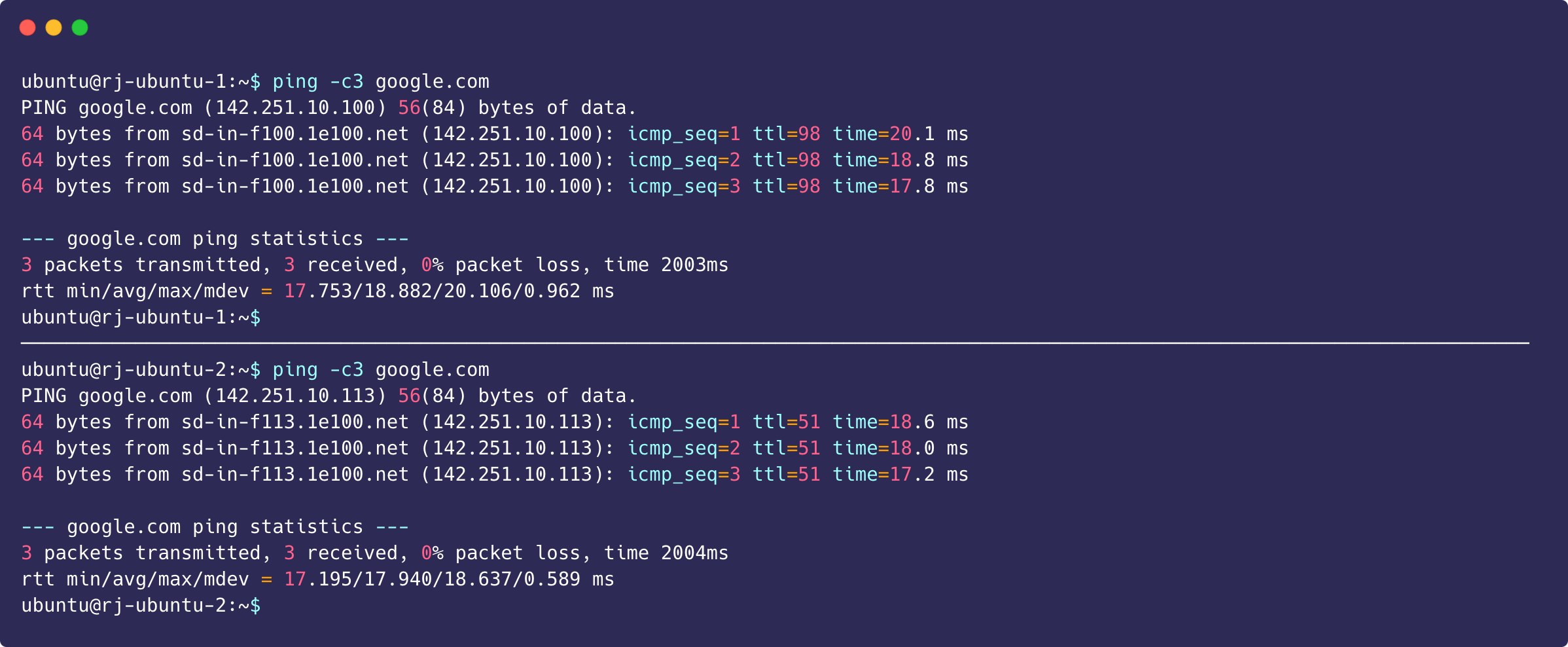

Log in and check that each VM has received a proper address and has connectivity. Make sure the VMs can access the external network and communicate with each other.

    virtctl console rj-ubuntu-1

    virtctl console rj-ubuntu-2

Both VMs can ping each other over the attached CIDR block, which confirms that the secondary network carries traffic between them.

Pinging Google also returns replies, which confirms that the VMs can reach the Internet.
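For readers who want to repeat these checks, the sketch below shows roughly what to run inside each VM after logging in through the console. It assumes the secondary NIC is named enp2s0, as in the cloud-init network data above, and uses 8.8.8.8 (Google Public DNS) as an example external target. The function name `check_connectivity` is hypothetical.

```shell
#!/usr/bin/env bash
# Run inside a VM, for example after `virtctl console rj-ubuntu-1`.
# Hypothetical helper: show the secondary NIC address, then ping the peer
# VM and an external host. Stops at the first step that fails.
check_connectivity() {
  local peer_ip="$1"
  local external_host="${2:-8.8.8.8}"   # assumption: any reachable external IP works
  ip -4 addr show dev enp2s0 &&         # secondary NIC should carry a 172.16.0.x address
    ping -c 3 "$peer_ip" &&             # peer VM over the secondary network
    ping -c 3 "$external_host"          # external reachability
}
```

On rj-ubuntu-1 the call would be `check_connectivity 172.16.0.12`, and on rj-ubuntu-2 it would be `check_connectivity 172.16.0.11`.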
