Previously in Part 1, I mentioned that Kube-OVN is more powerful than Multus-CNI, but what makes it so?

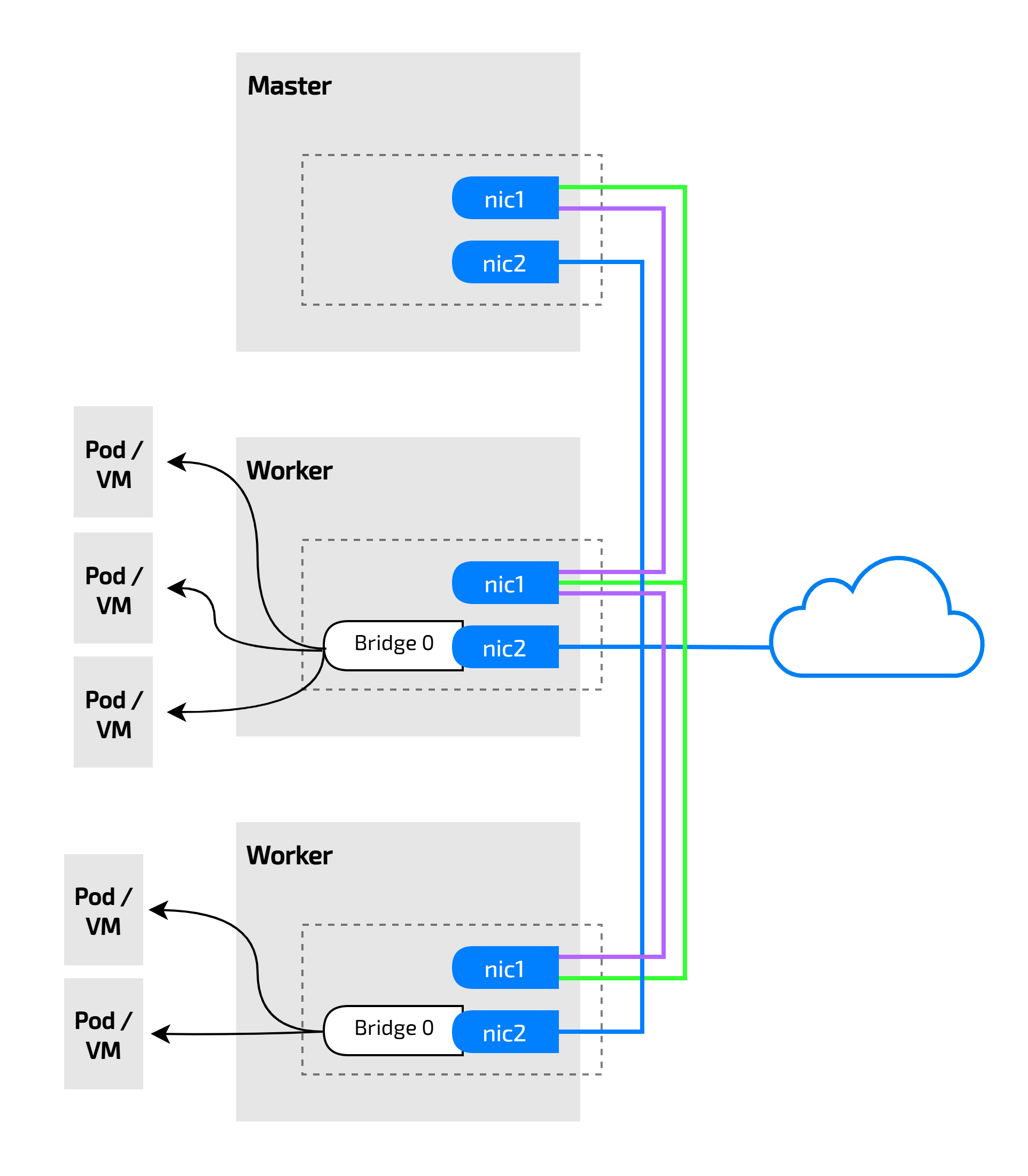

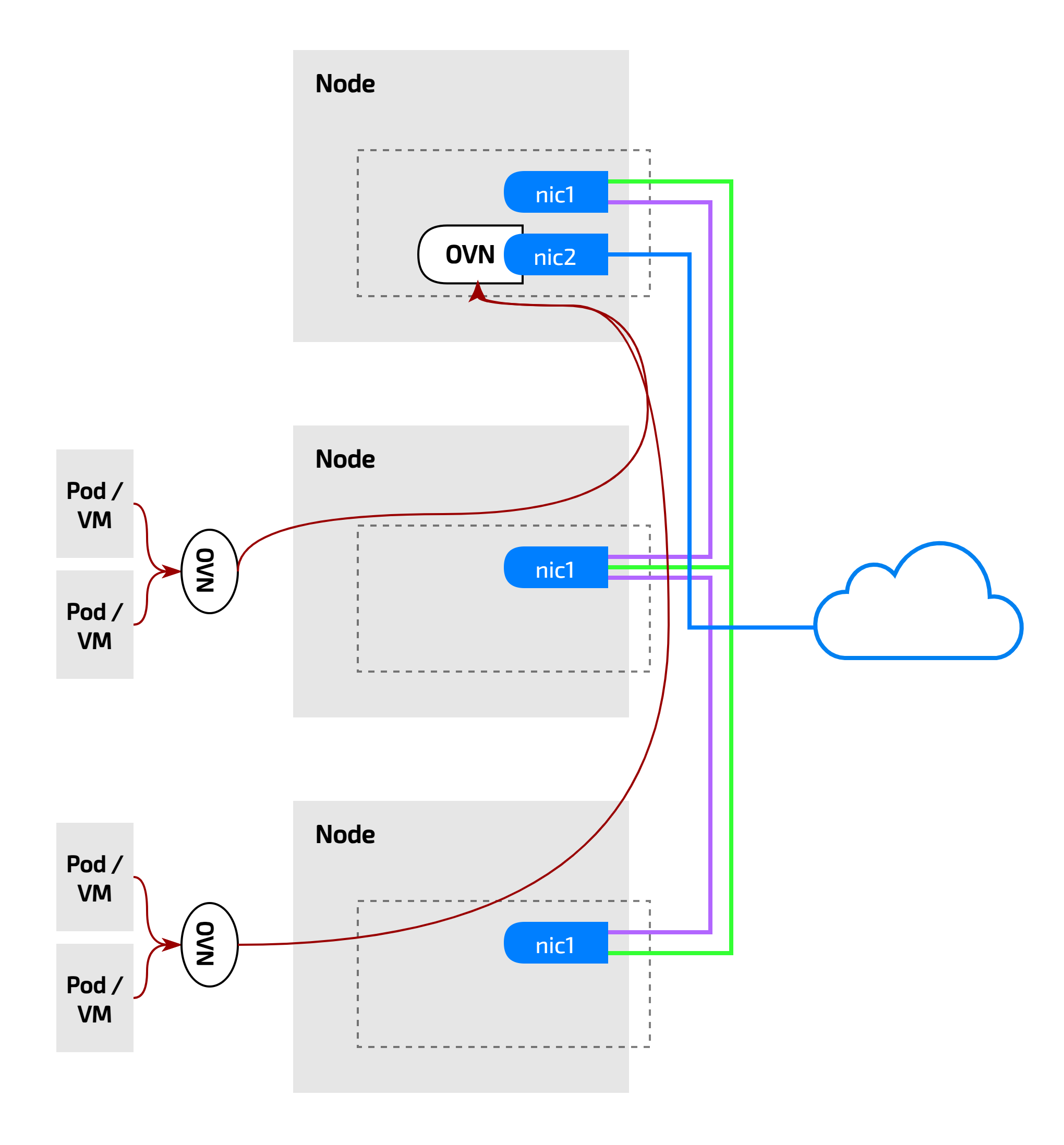

Adding NICs to every node can create a single point of failure (SPOF) on a worker or compute node. We might try to solve that by creating a bond interface from the two existing interfaces.

But that approach means each node requires an additional NIC to connect to the outside network, which is extremely costly. If a typical VM orchestration setup requires 1 NIC per worker node for external network access, this setup requires 2 NICs per node. With 50 worker nodes, you would need 100 NICs instead of the 50 in the traditional setup, which doubles the hardware requirement.

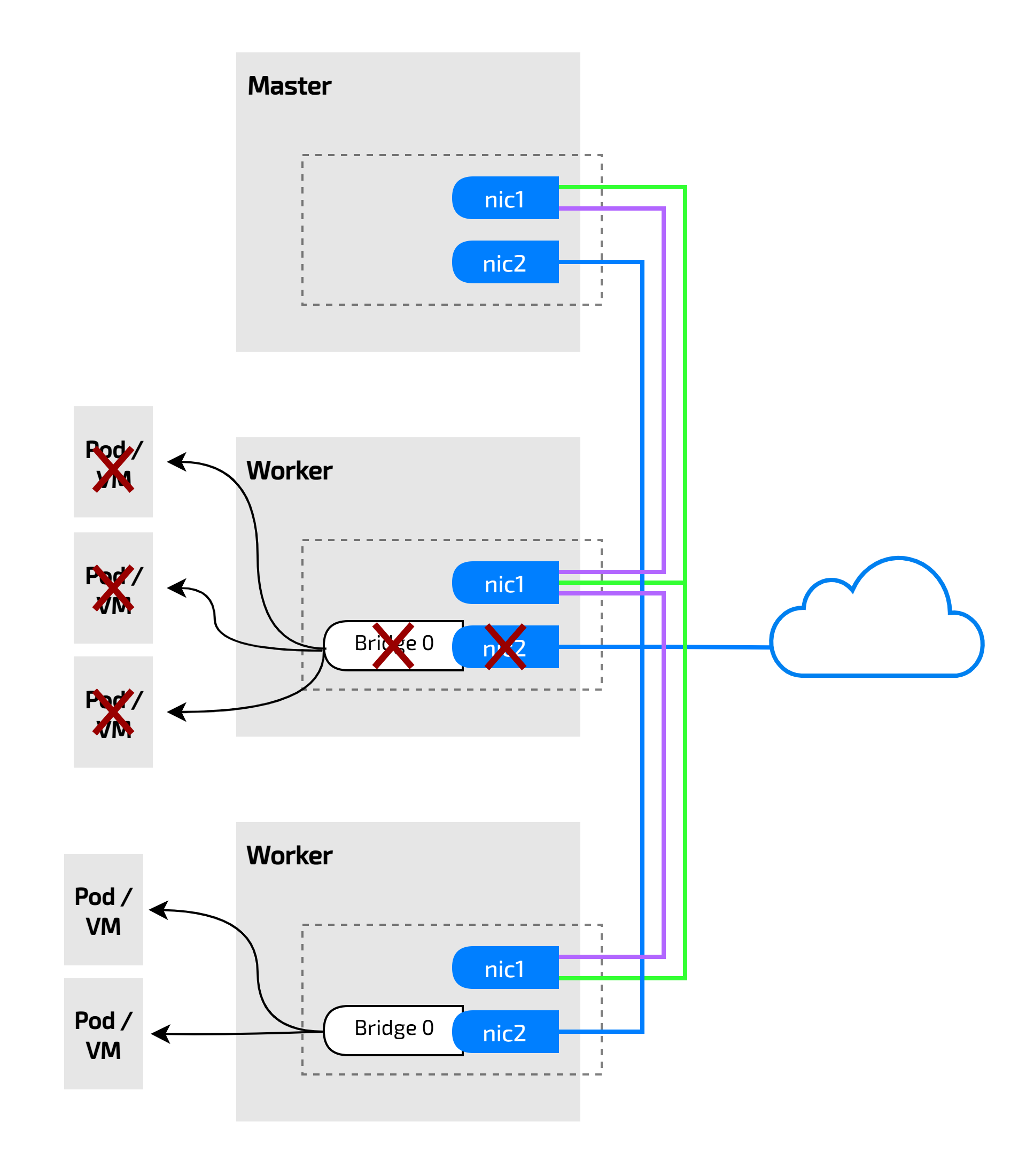

Worse, if a single NIC fails, it renders all the pods or VMs on that node inaccessible.

That issue can be resolved with SDN solutions like OVN.

Create OVN External Gateway

Create the ConfigMap ovn-external-gw-config in the kube-system namespace:

    apiVersion: v1
    kind: ConfigMap
    metadata:
      name: ovn-external-gw-config
      namespace: kube-system
    data:
      type: "centralized"
      enable-external-gw: "true"
      external-gw-nodes: "jah-k3s-h100-001,jah-k3s-h100-002,jah-k3s-h100-003"
      external-gw-nic: "enp8s0"
      external-gw-addr: "10.4.19.1/24"
      nic-ip: "10.4.19.254/24"
      nic-mac: "16:52:f3:13:6a:25"

- enable-external-gw: Whether to enable the SNAT and EIP functions.

- type: centralized or distributed; the default is centralized. If distributed is used, every node in the cluster needs a NIC with the same name to perform the gateway function.

- external-gw-nodes: In centralized mode, the names of the nodes performing the gateway role, comma separated.

- external-gw-nic: The name of the NIC that performs the gateway role on the node.

- external-gw-addr: The IP and mask of the physical network gateway.

- nic-ip, nic-mac: The IP and MAC assigned to the logical gateway port. Both must be unused on the physical subnet.

I set type to centralized because I want one or more specific nodes to act as the external gateway; to use every node as an external gateway, choose distributed instead. In this case only 3 nodes, listed in external-gw-nodes (jah-k3s-h100-001, jah-k3s-h100-002, and jah-k3s-h100-003), become external gateways. enable-external-gw is set to true to enable the EIP/FIP function, and nic-ip is the address that bridges my physical network and the OVN network.
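Before applying the ConfigMap, it can help to sanity-check the address fields. The snippet below is a minimal sketch using only the Python standard library; the helper name `check_external_gw` is hypothetical and not part of Kube-OVN. It confirms that nic-ip sits in the same subnet as external-gw-addr, that the two addresses differ, and that nic-mac is well formed. It cannot tell whether the IP or MAC is already in use on the physical network, so that still has to be checked by hand.

```python
import ipaddress
import re

# Values copied from the ConfigMap above.
config = {
    "external-gw-addr": "10.4.19.1/24",
    "nic-ip": "10.4.19.254/24",
    "nic-mac": "16:52:f3:13:6a:25",
}

MAC_RE = re.compile(r"^([0-9a-f]{2}:){5}[0-9a-f]{2}$", re.IGNORECASE)


def check_external_gw(cfg):
    """Return a list of problems; an empty list means the values look consistent."""
    problems = []
    gw = ipaddress.ip_interface(cfg["external-gw-addr"])
    nic = ipaddress.ip_interface(cfg["nic-ip"])
    if gw.network != nic.network:
        problems.append(f"nic-ip {nic} is not in the gateway subnet {gw.network}")
    if gw.ip == nic.ip:
        problems.append("nic-ip must differ from the physical gateway address")
    if not MAC_RE.match(cfg["nic-mac"]):
        problems.append(f"nic-mac {cfg['nic-mac']!r} is not a valid MAC address")
    return problems


print(check_external_gw(config))
```

With the values used in this post the list comes back empty.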

Confirm the Configuration Takes Effect

Check the OVN-NB status to confirm that the external logical switch exists and that the correct address and chassis are bound to the ovn-cluster-external logical router port.

    root@jah-k3s-ctrl-001:~# kubectl ko nbctl show
    switch 967436fa-c091-4340-b440-9ae33b3c98cc (external)
        port localnet.external
            type: localnet
            addresses: ["unknown"]
        port external-ovn-cluster
            type: router
            router-port: ovn-cluster-external
    router 862b5126-73ae-4173-bf53-1a1350fdfebb (ovn-cluster)
        port ovn-cluster-external
            mac: "16:52:f3:13:6a:25"
            networks: ["10.4.19.254/24"]
            gateway chassis: [f4af51d5-595d-4138-9e6d-410a707a6da9 06c2cd08-4c5e-459d-8b80-8ddf0b98153a 1e49fb9a-aa0d-4077-b953-ed21d8499df1]
        port ovn-cluster-ovn-default
            mac: "3e:68:47:12:a1:41"
            networks: ["10.253.0.1/16"]
        port ovn-cluster-join
            mac: "42:25:27:76:f6:20"
            networks: ["100.64.0.1/17"]
        nat 8795fcb2-9a12-418c-8db7-2608d76f8e61
            external ip: "10.4.19.101"
            logical ip: "10.253.0.83"
            type: "dnat_and_snat"

Check the OVS status to confirm that the corresponding NIC is bridged into the br-external bridge:

    root@jah-k3s-ctrl-001:~# kubectl ko vsctl jah-k3s-h100-001 show
    29613471-5654-4e0b-8e61-b48f6648824a
        Bridge br-external
            Port enp8s0
                Interface enp8s0
            Port patch-localnet.external-to-br-int
                Interface patch-localnet.external-to-br-int
                    type: patch
                    options: {peer=patch-br-int-to-localnet.external}
            Port br-external
                Interface br-external
                    type: internal
        Bridge br-int
            fail_mode: secure
            datapath_type: system
            Port br-int
                Interface br-int
                    type: internal
        ....................
        ovs_version: "3.1.6"

From OVN's perspective, the ovn-cluster-external port is created with the same IP and MAC address specified in our ConfigMap. Can we test pinging it from outside the network?

Outside:

    root@raja-nfs:~/kubevirt-vm/ovn-ext-gw# ping -c3 10.4.19.254
    PING 10.4.19.254 (10.4.19.254) 56(84) bytes of data.
    64 bytes from 10.4.19.254: icmp_seq=1 ttl=254 time=0.436 ms
    64 bytes from 10.4.19.254: icmp_seq=2 ttl=254 time=0.399 ms
    64 bytes from 10.4.19.254: icmp_seq=3 ttl=254 time=0.470 ms

    --- 10.4.19.254 ping statistics ---
    3 packets transmitted, 3 received, 0% packet loss, time 2053ms
    rtt min/avg/max/mdev = 0.399/0.435/0.470/0.029 ms

Worker:

    root@jah-k3s-h100-001:~# tcpdump -i enp8s0 icmp
    tcpdump: verbose output suppressed, use -v[v]... for full protocol decode
    listening on enp8s0, link-type EN10MB (Ethernet), snapshot length 262144 bytes
    09:13:14.393120 IP 10.4.19.29 > 10.4.19.254: ICMP echo request, id 82, seq 1, length 64
    09:13:14.393401 IP 10.4.19.254 > 10.4.19.29: ICMP echo reply, id 82, seq 1, length 64
    09:13:15.421829 IP 10.4.19.29 > 10.4.19.254: ICMP echo request, id 82, seq 2, length 64
    09:13:15.422061 IP 10.4.19.254 > 10.4.19.29: ICMP echo reply, id 82, seq 2, length 64
    09:13:16.445761 IP 10.4.19.29 > 10.4.19.254: ICMP echo request, id 82, seq 3, length 64
    09:13:16.446085 IP 10.4.19.254 > 10.4.19.29: ICMP echo reply, id 82, seq 3, length 64

Great! The IP is reachable and can be pinged from outside.

Operational Test Create VM

Let's create a KubeVirt VM:

    # vm.yaml
    ---
    apiVersion: kubevirt.io/v1
    kind: VirtualMachine
    metadata:
      name: testing
    spec:
      runStrategy: Always
      template:
        metadata:
          labels:
            kubevirt.io/size: small
            kubevirt.io/domain: testing
        spec:
          domain:
            cpu:
              cores: 2
            devices:
              disks:
                - name: disk0
                  disk:
                    bus: virtio
                - name: cloudinit
                  cdrom:
                    bus: sata
                    readonly: true
              rng: {}
              interfaces:
                - name: default
                  masquerade: {}
            resources:
              requests:
                memory: 1024Mi
          terminationGracePeriodSeconds: 15
          networks:
            - name: default
              pod: {}
          volumes:
            - name: disk0
              dataVolume:
                name: testing
            - name: cloudinit
              cloudInitNoCloud:
                userData: |-
                  #cloud-config
                  chpasswd:
                    list: |
                      ubuntu:ubuntu
                    expire: False
                  disable_root: false
                  ssh_pwauth: True
                  ssh_authorized_keys:
                    - ssh-rsa AAAA...
      dataVolumeTemplates:
        - metadata:
            name: testing
          spec:
            storage:
              storageClassName: csi-rbd-sc
              accessModes:
                - ReadWriteMany
              resources:
                requests:
                  storage: 10Gi
            source:
              registry:
                url: docker://quay.io/containerdisks/ubuntu:22.04

Check that the VM and its instance are running:

    kubectl get vmi,vm testing
    NAME                                         AGE   PHASE     IP            NODENAME           READY
    virtualmachineinstance.kubevirt.io/testing   1h    Running   10.253.0.83   jah-k3s-h100-003   True

    NAME                                 AGE   STATUS    READY
    virtualmachine.kubevirt.io/testing   1h    Running   True

Attach EIP

The EIP or SNAT rules configured on a Pod can be adjusted dynamically via kubectl or other tools. Remember to remove the ovn.kubernetes.io/routed annotation afterwards to trigger the routing change.

    kubectl get pod -o wide
    NAME                          READY   STATUS    RESTARTS   AGE   IP            NODE               NOMINATED NODE   READINESS GATES
    virt-launcher-testing-4vmxf   2/2     Running   0          16h   10.253.0.83   jah-k3s-h100-003   <none>           1/1

    kubectl annotate pod virt-launcher-testing-4vmxf ovn.kubernetes.io/eip=10.4.19.101 --overwrite
    kubectl annotate pod virt-launcher-testing-4vmxf ovn.kubernetes.io/routed-

When the EIP or SNAT takes effect, the ovn.kubernetes.io/routed annotation is added back.
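If you attach EIPs often, the two annotate steps can be generated instead of typed. This is a rough sketch: the helper `eip_commands` is hypothetical, and the explicit `-n default` is an assumption based on the pod above living in the default namespace. It only builds the argument lists and leaves running them to you.

```python
import shlex

EIP_ANNOTATION = "ovn.kubernetes.io/eip"
ROUTED_ANNOTATION = "ovn.kubernetes.io/routed"


def eip_commands(pod, eip, namespace="default"):
    """Build the two kubectl invocations used above, as argv lists."""
    base = ["kubectl", "-n", namespace, "annotate", "pod", pod]
    return [
        # Set (or replace) the EIP on the pod.
        base + [f"{EIP_ANNOTATION}={eip}", "--overwrite"],
        # A trailing "-" tells kubectl to remove the annotation.
        base + [f"{ROUTED_ANNOTATION}-"],
    ]


for argv in eip_commands("virt-launcher-testing-4vmxf", "10.4.19.101"):
    print(shlex.join(argv))
```

Each list can be passed to `subprocess.run(argv, check=True)` on a machine where kubectl is configured for the cluster.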

Recheck from OVN Perspective

    root@jah-k3s-ctrl-001:~# kubectl ko nbctl show
    switch f6adab24-f39f-4d34-9226-0d38f9375331 (ovn-default)
    .........................
    switch 967436fa-c091-4340-b440-9ae33b3c98cc (external)
        port localnet.external
            type: localnet
            addresses: ["unknown"]
        port external-ovn-cluster
            type: router
            router-port: ovn-cluster-external
    router 862b5126-73ae-4173-bf53-1a1350fdfebb (ovn-cluster)
        port ovn-cluster-external
            mac: "16:52:f3:13:6a:25"
            networks: ["10.4.19.254/24"]
            gateway chassis: [f4af51d5-595d-4138-9e6d-410a707a6da9 06c2cd08-4c5e-459d-8b80-8ddf0b98153a 1e49fb9a-aa0d-4077-b953-ed21d8499df1]
        port ovn-cluster-ovn-default
            mac: "3e:68:47:12:a1:41"
            networks: ["10.253.0.1/16"]
        port ovn-cluster-join
            mac: "42:25:27:76:f6:20"
            networks: ["100.64.0.1/17"]
        nat 8795fcb2-9a12-418c-8db7-2608d76f8e61
            external ip: "10.4.19.101"
            logical ip: "10.253.0.83"
            type: "dnat_and_snat"

    root@jah-k3s-ctrl-001:~# kubectl ko nbctl lr-nat-list ovn-cluster
    TYPE             GATEWAY_PORT   EXTERNAL_IP   EXTERNAL_PORT   LOGICAL_IP    EXTERNAL_MAC   LOGICAL_PORT
    dnat_and_snat                   10.4.19.101                   10.253.0.83
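To check the NAT rule from a script rather than by eye, the lr-nat-list output can be parsed. The sketch below is a heuristic, not an official parser: because empty columns make plain whitespace splitting ambiguous, it treats the first token as the rule type and the first two tokens that parse as IP addresses as the external and logical IPs. It would skip rules whose logical IP is a CIDR range, and the function name `parse_nat_rules` is made up for this example.

```python
import ipaddress

# Output copied from the lr-nat-list command above.
LR_NAT_LIST = """\
TYPE GATEWAY_PORT EXTERNAL_IP EXTERNAL_PORT LOGICAL_IP EXTERNAL_MAC LOGICAL_PORT
dnat_and_snat 10.4.19.101 10.253.0.83
"""


def _is_ip(token):
    """True if the token is a plain IPv4/IPv6 host address."""
    try:
        ipaddress.ip_address(token)
        return True
    except ValueError:
        return False


def parse_nat_rules(text):
    """Return (type, external_ip, logical_ip) tuples from lr-nat-list output."""
    rules = []
    for line in text.splitlines()[1:]:  # skip the header row
        tokens = line.split()
        if not tokens:
            continue
        ips = [t for t in tokens[1:] if _is_ip(t)]
        if len(ips) >= 2:
            rules.append((tokens[0], ips[0], ips[1]))
    return rules


rules = parse_nat_rules(LR_NAT_LIST)
print(rules)
```

For the output above this yields one dnat_and_snat rule mapping 10.4.19.101 to 10.253.0.83, which matches the VMI address reported earlier.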

OVN is working as expected and has created the dnat_and_snat rule for the EIP/FIP. Time to take it for a test drive!

VM:

    user@sandbox:~/k3s$ virtctl console testing
    Successfully connected to testing console. The escape sequence is ^]

    ubuntu@testing:~$ ping -c3 10.4.19.1
    PING 10.4.19.1 (10.4.19.1) 56(84) bytes of data.
    64 bytes from 10.4.19.1: icmp_seq=1 ttl=62 time=1.74 ms
    64 bytes from 10.4.19.1: icmp_seq=2 ttl=62 time=0.853 ms
    64 bytes from 10.4.19.1: icmp_seq=3 ttl=62 time=0.478 ms

    --- 10.4.19.1 ping statistics ---
    3 packets transmitted, 3 received, 0% packet loss, time 2003ms
    rtt min/avg/max/mdev = 0.478/1.024/1.742/0.530 ms
    ubuntu@testing:~$
    ubuntu@testing:~$ ip a
    1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000
        link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
        inet 127.0.0.1/8 scope host lo
           valid_lft forever preferred_lft forever
        inet6 ::1/128 scope host
           valid_lft forever preferred_lft forever
    2: enp1s0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 8900 qdisc fq_codel state UP group default qlen 1000
        link/ether fe:71:36:c8:d0:04 brd ff:ff:ff:ff:ff:ff
        inet 10.0.2.2/24 metric 100 brd 10.0.2.255 scope global dynamic enp1s0
           valid_lft 86254361sec preferred_lft 86254361sec
        inet6 fe80::fc71:36ff:fec8:d004/64 scope link
           valid_lft forever preferred_lft forever
    ubuntu@testing:~$

Outside:

    root@raja-nfs:~# ping -c3 10.4.19.101
    PING 10.4.19.101 (10.4.19.101) 56(84) bytes of data.
    64 bytes from 10.4.19.101: icmp_seq=1 ttl=62 time=2.55 ms
    64 bytes from 10.4.19.101: icmp_seq=2 ttl=62 time=0.965 ms
    64 bytes from 10.4.19.101: icmp_seq=3 ttl=62 time=0.854 ms

    --- 10.4.19.101 ping statistics ---
    3 packets transmitted, 3 received, 0% packet loss, time 2003ms
    rtt min/avg/max/mdev = 0.854/1.457/2.553/0.776 ms
    root@raja-nfs:~#
    root@raja-nfs:~# ssh [email protected] hostname
    testing
    root@raja-nfs:~# ssh [email protected] whoami
    ubuntu
    root@raja-nfs:~# ssh [email protected] ip a
    1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000
        link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
        inet 127.0.0.1/8 scope host lo
           valid_lft forever preferred_lft forever
        inet6 ::1/128 scope host
           valid_lft forever preferred_lft forever
    2: enp1s0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 8900 qdisc fq_codel state UP group default qlen 1000
        link/ether fe:71:36:c8:d0:04 brd ff:ff:ff:ff:ff:ff
        inet 10.0.2.2/24 metric 100 brd 10.0.2.255 scope global dynamic enp1s0
           valid_lft 86254426sec preferred_lft 86254426sec
        inet6 fe80::fc71:36ff:fec8:d004/64 scope link
           valid_lft forever preferred_lft forever

Nice! The EIP/FIP works just like it does on other VM orchestration platforms.

Deep Dive

We have verified that the IP is reachable from both the physical network and the OVN network. But now the real question is: where is that IP actually attached? And when and where does the IP translation occur?

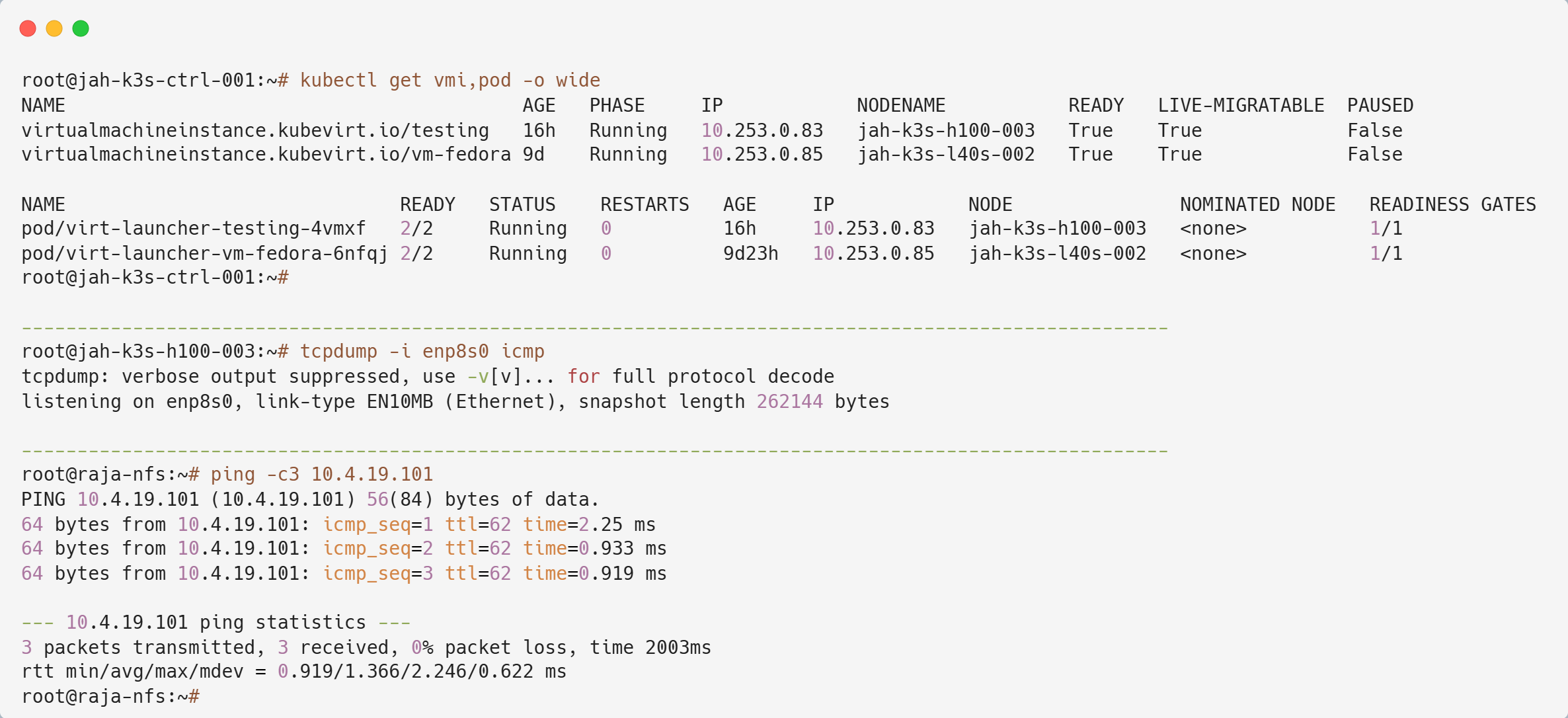

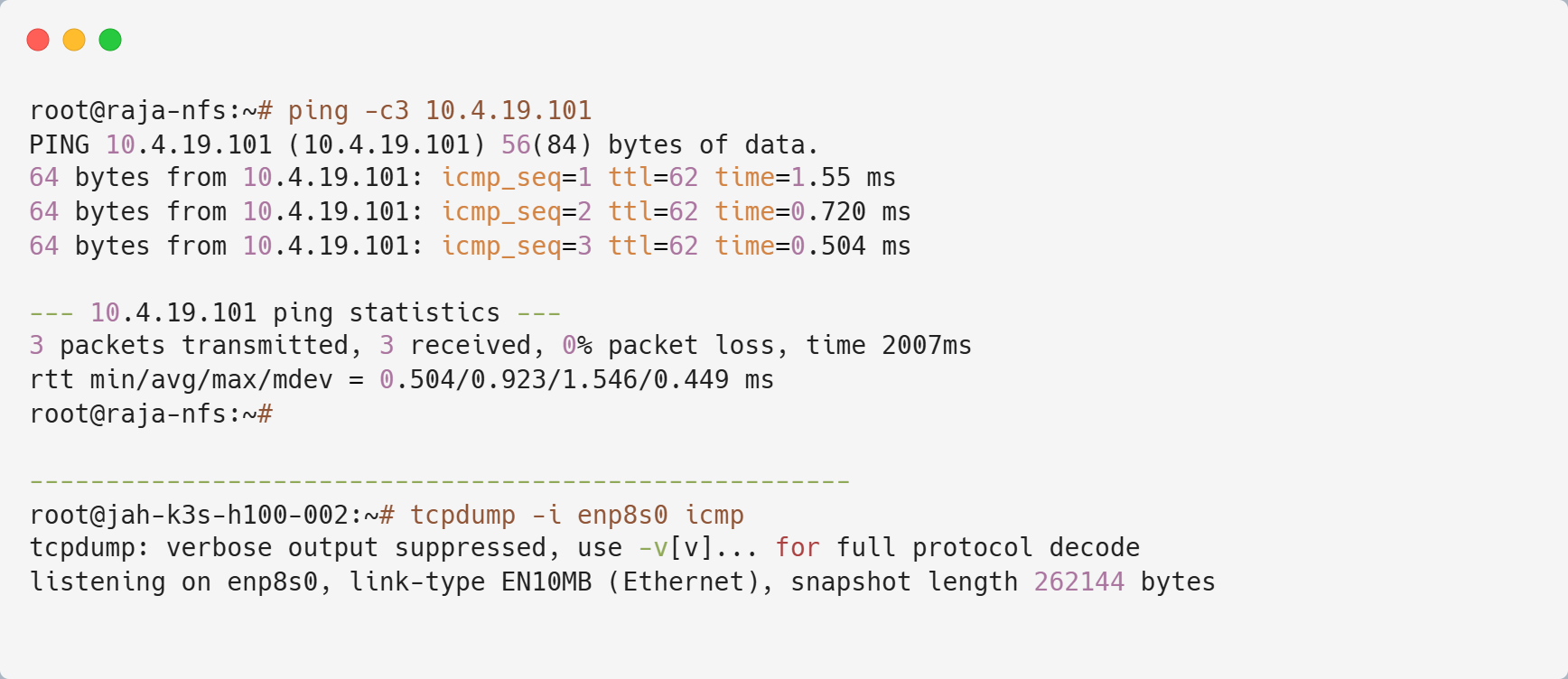

Let’s begin by sniffing the interface on jah-k3s-h100-003, since that’s where the pod and VM are located.

From the terminal output, we can observe that the secondary NIC did not capture any ICMP traffic, even though the pod and VM are running there. Now, let’s switch to jah-k3s-h100-001.

There we have it: traffic flows into jah-k3s-h100-001 and is then forwarded to jah-k3s-h100-003. This highlights a key advantage of OVN: it can designate specific nodes as external gateways, eliminating the need for every node to have identical interfaces.

With OVN, only a few nodes require a secondary NIC to act as external gateway nodes, making it more efficient. This is a great example of how OVN operates seamlessly without needing additional tools for bridging, tunneling, routing, or NAT-ing.
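To put rough numbers on that saving, here is a small worked calculation. It assumes every node already has one primary NIC and that each external-facing node needs exactly one extra NIC; the 50-node figure comes from the earlier example and the 3 gateway nodes from this cluster. The function name `nic_totals` is only for illustration.

```python
def nic_totals(worker_nodes, gateway_nodes):
    """Total NICs needed under the three layouts discussed in this post."""
    return {
        "one NIC per node": worker_nodes,
        "extra NIC on every node": worker_nodes * 2,
        "extra NIC on gateway nodes only": worker_nodes + gateway_nodes,
    }


print(nic_totals(worker_nodes=50, gateway_nodes=3))
```

Under these assumptions, 50 workers with 3 centralized gateways need 53 NICs rather than 100.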

pod -> node ovn -> centralized ovn -> destination node ovn -> destination pod
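The address rewriting along that path can be pictured with a toy model. This is a conceptual sketch only, not how OVN implements NAT internally: it applies the dnat_and_snat rule from lr-nat-list to a packet's source and destination, as the gateway node would. The client address 10.4.19.29 is taken from the earlier tcpdump; the `Packet` class and function names are invented for the example.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Packet:
    src: str
    dst: str


# The dnat_and_snat rule shown by lr-nat-list: external IP <-> logical IP.
EIP = "10.4.19.101"
POD_IP = "10.253.0.83"


def inbound(pkt):
    """DNAT on the gateway node: rewrite the EIP to the pod IP."""
    return replace(pkt, dst=POD_IP) if pkt.dst == EIP else pkt


def outbound(pkt):
    """SNAT on the gateway node: rewrite the pod IP back to the EIP."""
    return replace(pkt, src=EIP) if pkt.src == POD_IP else pkt


request = inbound(Packet(src="10.4.19.29", dst=EIP))
reply = outbound(Packet(src=POD_IP, dst="10.4.19.29"))
print(request)
print(reply)
```

The outside host only ever sees 10.4.19.101, while the VM's pod only ever sees its own 10.253.0.83 address.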

Failover Test

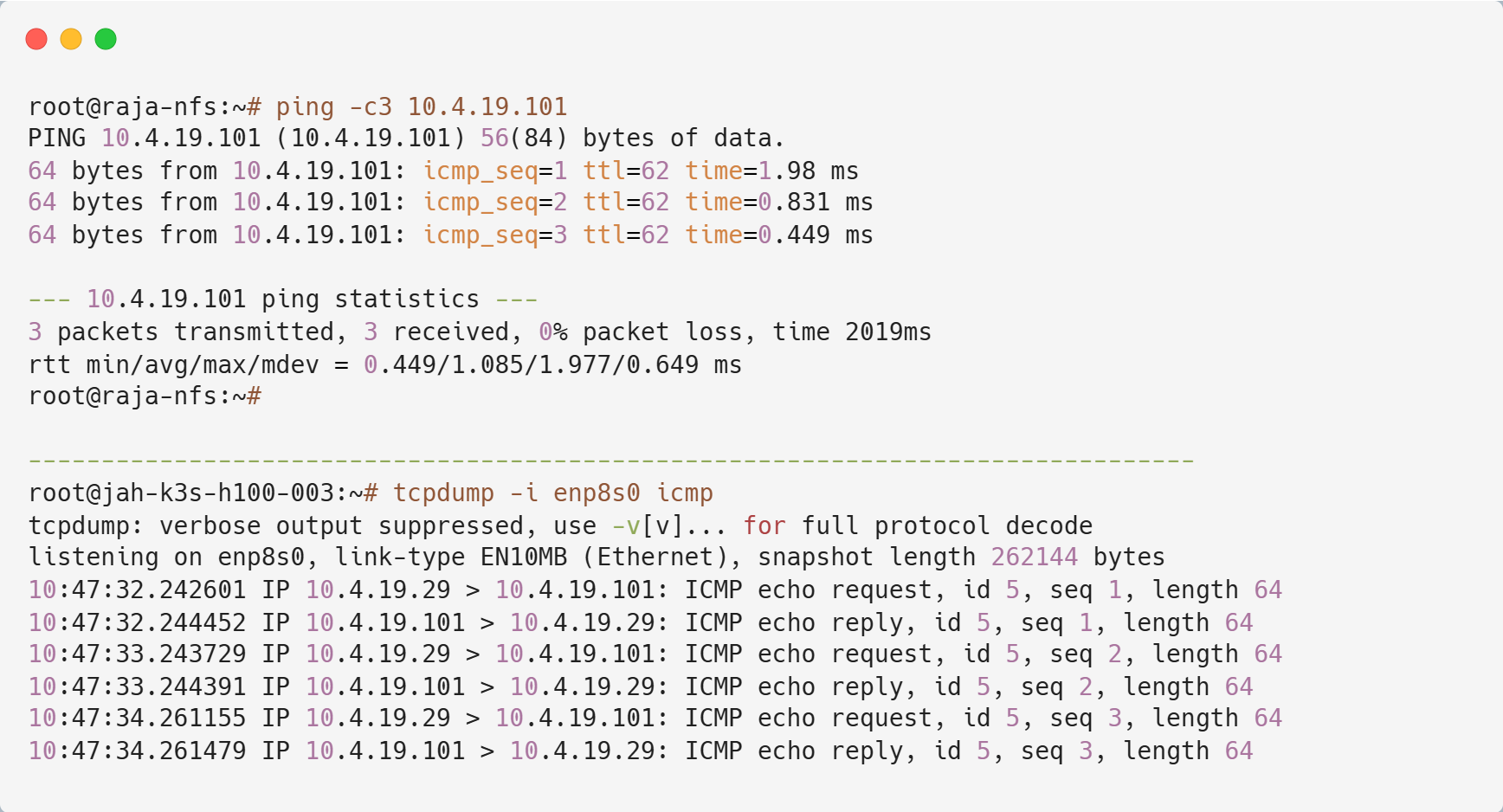

I chose jah-k3s-h100-001, jah-k3s-h100-002, and jah-k3s-h100-003 as "centralized" nodes, meaning all NATing, ingress, and egress processes happen there. Earlier, when I tested pinging the VM, all traffic was routed through jah-k3s-h100-001, even though the pod and VM were running on jah-k3s-h100-003.

So, let’s begin by shutting down jah-k3s-h100-001. What do you think will happen?

kubectl get node

NAME STATUS ROLES AGE VERSION

jah-k3s-ctrl-001 Ready control-plane,etcd,master 19d v1.28.11+k3s1

jah-k3s-ctrl-002 Ready control-plane,etcd,master 19d v1.28.11+k3s1

jah-k3s-ctrl-003 Ready control-plane,etcd,master 19d v1.28.11+k3s1

jah-k3s-h100-001 NotReady,SchedulingDisabled worker 19d v1.28.11+k3s1

jah-k3s-h100-002 Ready worker 19d v1.28.11+k3s1

jah-k3s-h100-003 Ready worker 19d v1.28.11+k3s1

jah-k3s-halb-001 Ready infra,worker 19d v1.28.11+k3s1

jah-k3s-halb-002 Ready infra,worker 19d v1.28.11+k3s1

jah-k3s-halb-003 Ready infra,worker 19d v1.28.11+k3s1

jah-k3s-l40s-001 Ready worker 19d v1.28.11+k3s1

jah-k3s-l40s-002 Ready worker 19d v1.28.11+k3s1

jah-k3s-l40s-003 Ready worker 19d v1.28.11+k3s1

jah-k3s-prmt-001 Ready logging,metrics,monitoring,worker 19d v1.28.11+k3s1

jah-k3s-prmt-002 Ready logging,metrics,monitoring,worker 19d v1.28.11+k3s1

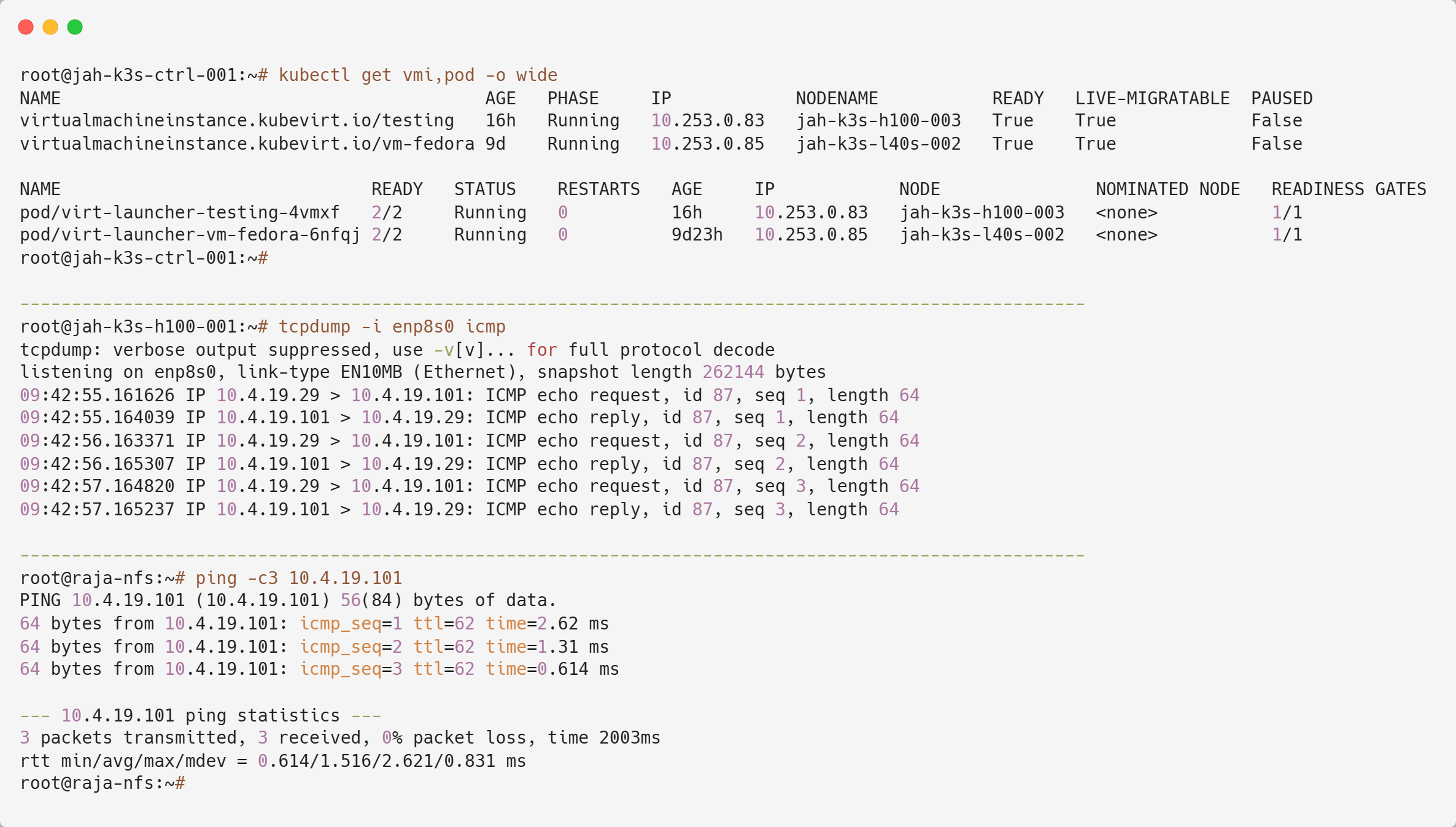

jah-k3s-prmt-003 Ready logging,metrics,monitoring,worker 19d v1.28.11+k3s1Let's begin by sniffing the interface on jah-k3s-h100-002.

From the terminal output, we can observe that the secondary NIC hasn't captured any ICMP traffic.

Now, let's move on to jah-k3s-h100-003.

There we go, now all the traffic is directed towards jah-k3s-h100-003.
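One way to picture this failover: OVN gateway chassis carry priorities, and the highest-priority chassis that is still reachable hosts the gateway port. The sketch below models just that selection step. The priority numbers are hypothetical, chosen only to match the order observed in this test (001 first, then 003), and are not read from the cluster.

```python
def active_gateway(chassis_priority, alive):
    """Pick the highest-priority gateway chassis that is still reachable."""
    candidates = [name for name in chassis_priority if name in alive]
    if not candidates:
        return None
    return max(candidates, key=lambda name: chassis_priority[name])


# Hypothetical priorities, chosen only to match the behaviour seen above.
priorities = {
    "jah-k3s-h100-001": 3,
    "jah-k3s-h100-003": 2,
    "jah-k3s-h100-002": 1,
}

all_up = {"jah-k3s-h100-001", "jah-k3s-h100-002", "jah-k3s-h100-003"}
before = active_gateway(priorities, alive=all_up)
after = active_gateway(priorities, alive=all_up - {"jah-k3s-h100-001"})
print(before)
print(after)
```

In this model the gateway role moves from jah-k3s-h100-001 to jah-k3s-h100-003 once the first node goes down, which is what the captures show.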

Impressions from Using KubeVirt

One of the advantages of KubeVirt is that you can run a VM on a container network. Together, KubeVirt and OVN significantly change how VM orchestration behaves. The most notable change is that cloud-init data is delivered on a CD-ROM disk instead of through a network web service, so no separately managed DHCP server is needed. From a functionality perspective, especially in networking, KubeVirt+OVN offers nearly the same capabilities as other VM orchestration tools such as OpenStack, Proxmox, and VMware.

KubeVirt technology addresses the needs of development teams that have adopted or want to adopt Kubernetes but possess existing Virtual Machine-based workloads that cannot be easily containerized. KubeVirt isn't intended to replace traditional VM orchestration tools just yet. For now, it's more about integrating VMs into Kubernetes environments.

Perhaps some companies will take this concept seriously and build VM orchestration on top of Kubernetes using modified versions of KubeVirt and Kube-OVN in the future.

Reference