By default, a KubeVirt VM is connected only to the container (pod) network and is not reachable from outside the cluster. One way to reach it from outside is a Kubernetes Service of type LoadBalancer: make the Service's selector match the VM's kubevirt.io/domain label and define the ports you want to expose. Once the Service is created, the load balancer assigns it an external IP that you can use to access the VM from outside the cluster.
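A minimal sketch of such a Service follows. It assumes the "testing" VM defined later in this post, whose template carries the label kubevirt.io/domain: testing, and it assumes SSH on port 22. The Service name testing-ssh is hypothetical. KubeVirt propagates the VMI labels to the virt-launcher pod, so that pod is what the selector actually matches. The cluster also needs a LoadBalancer implementation before an external IP gets assigned.

```yaml
# Hypothetical Service exposing SSH of the "testing" VM
apiVersion: v1
kind: Service
metadata:
  name: testing-ssh
spec:
  type: LoadBalancer
  selector:
    kubevirt.io/domain: testing   # label from the VM template, copied to virt-launcher
  ports:
    - name: ssh
      protocol: TCP
      port: 22        # port exposed on the external IP
      targetPort: 22  # port the guest's SSH daemon listens on
```

With masquerade binding on the pod network, traffic that reaches the virt-launcher pod on port 22 is forwarded into the guest. Running virtctl expose vm testing --name testing-ssh --type LoadBalancer --port 22 should generate an equivalent Service.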

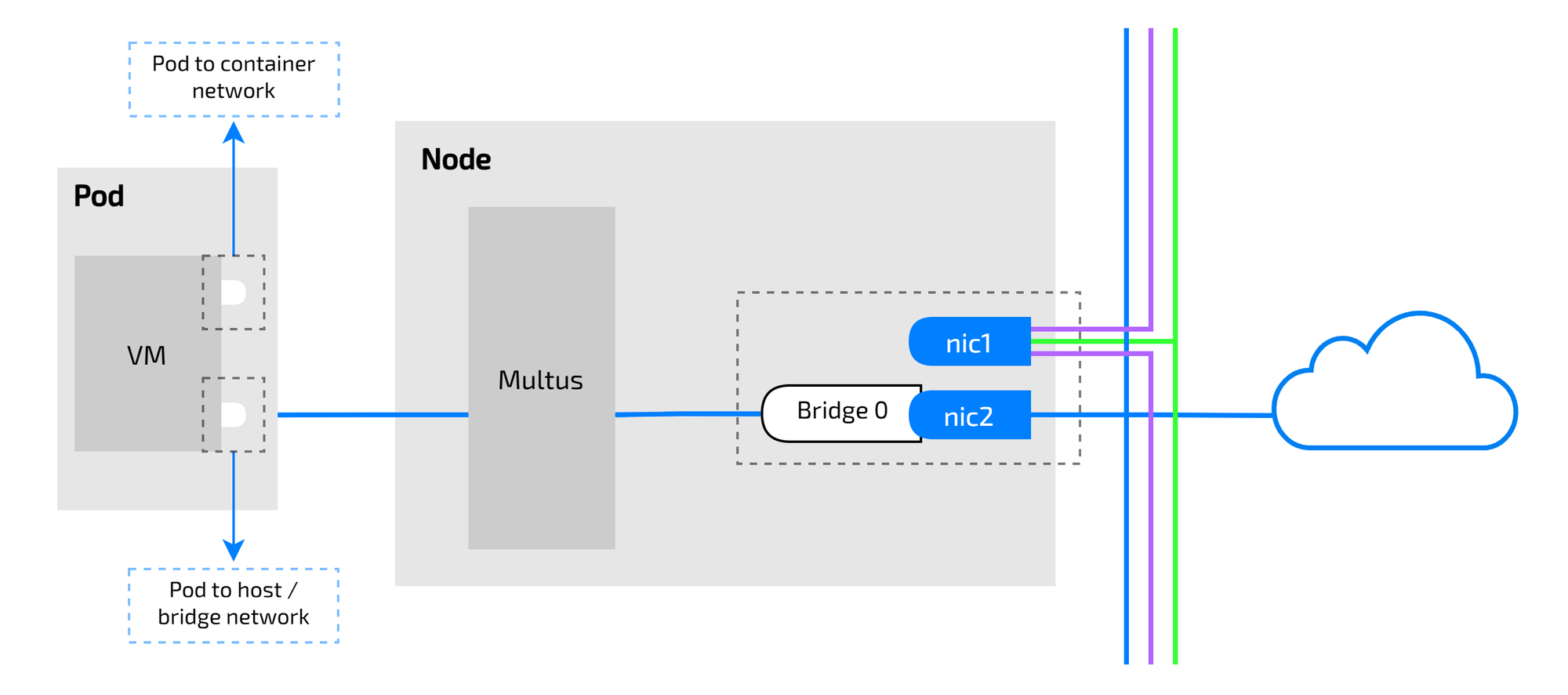

This time, I want to connect the VM not only to the container network but also to the host network, so we use Multus, a meta CNI plugin. Multus lets you attach multiple NICs to a pod. KubeVirt natively supports Multus, so it can attach multiple NICs to VMs as well.

Configure Bridge on Worker Host

First, on all worker nodes, create a bridge called br0.

```
root@jah-k3s-h100-001:~# ip link show enp8s0
4: enp8s0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel master br0 state UP mode DEFAULT group default qlen 1000
    link/ether 52:54:00:f4:cd:0a brd ff:ff:ff:ff:ff:ff
```

```yaml
# /etc/netplan/54-static-tenant.yaml
network:
  version: 2
  ethernets:
    enp8s0:
      dhcp4: false
      dhcp6: false
  bridges:
    br0:
      interfaces: [enp8s0]
      addresses: [10.4.19.41/24]
      routes:
        - to: 10.4.19.0/24
          via: 10.4.19.1
      nameservers:
        addresses: [10.4.19.1, 8.8.8.8]
      parameters:
        stp: false
      dhcp4: false
      dhcp6: false
```

```
sudo netplan try
sudo netplan apply
```

Here, enp8s0 is my secondary network interface. The config creates a bridge called br0 and adds enp8s0 to it as a port.

```
root@jah-k3s-h100-001:~# brctl show
bridge name     bridge id               STP enabled     interfaces
br0             8000.1aa33bae806b       no              enp8s0
```

Create NetworkAttachmentDefinition

With Multus installed, apply a NetworkAttachmentDefinition that uses the bridge CNI plugin to attach interfaces to br0:

```yaml
# nad.yaml
apiVersion: "k8s.cni.cncf.io/v1"
kind: NetworkAttachmentDefinition
metadata:
  name: underlay-bridge
spec:
  config: |
    {
      "cniVersion": "0.3.1",
      "name": "underlay-bridge",
      "type": "bridge",
      "bridge": "br0",
      "ipam": {
        "type": "host-local",
        "subnet": "10.4.19.0/24"
      }
    }
```

```
kubectl apply -f nad.yaml

kubectl get net-attach-def
NAME              AGE
underlay-bridge   5m
```
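The NAD above lets host-local hand out any address in 10.4.19.0/24. That is the same subnet the worker nodes (for example 10.4.19.41) and the gateway (10.4.19.1) use. host-local also keeps its allocation state separately on each node, so two nodes can hand out the same address.

A more defensive variant is sketched below under assumptions. It narrows the pool to a range assumed to be unused (10.4.19.150 to 10.4.19.199 is a hypothetical choice that still contains the .171 requested by the sample Pod below). It also declares the ips capability, so that a requested address such as "ips": [ "10.4.19.171" ] is delivered to the IPAM plugin as runtime config.

```yaml
# Hypothetical variant of nad.yaml: the address range is an assumption,
# choose one that nothing else on 10.4.19.0/24 uses.
apiVersion: "k8s.cni.cncf.io/v1"
kind: NetworkAttachmentDefinition
metadata:
  name: underlay-bridge
spec:
  config: |
    {
      "cniVersion": "0.3.1",
      "name": "underlay-bridge",
      "type": "bridge",
      "bridge": "br0",
      "capabilities": { "ips": true },
      "ipam": {
        "type": "host-local",
        "subnet": "10.4.19.0/24",
        "rangeStart": "10.4.19.150",
        "rangeEnd": "10.4.19.199",
        "gateway": "10.4.19.1"
      }
    }
```

Keeping the pool away from addresses assigned by hand (nodes, gateway, DNS) avoids the most obvious collisions. It does not remove the per-node nature of host-local.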

Create Sample Pod

As an example, add the following annotation to a simple nginx Pod and apply it.

```yaml
apiVersion: v1
kind: Pod
metadata:
  creationTimestamp: null
  labels:
    run: nginx
  name: nginx
  annotations:
    k8s.v1.cni.cncf.io/networks: |
      [
        {
          "name": "underlay-bridge",
          "interface": "eth1",
          "ips": [ "10.4.19.171" ]
        }
      ]
spec:
  containers:
    - image: nginx
      name: nginx
      ports:
        - containerPort: 80
      resources: {}
  dnsPolicy: ClusterFirst
  restartPolicy: Always
status: {}
```

When we exec into the nginx Pod and look at its IP addresses, we see the following.

```
$ kubectl exec -it nginx -- /bin/bash
root@nginx:/# ip a
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
       valid_lft forever preferred_lft forever
    inet6 ::1/128 scope host
       valid_lft forever preferred_lft forever
2: eth1@if29: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default
    link/ether 7e:cf:c3:79:03:fc brd ff:ff:ff:ff:ff:ff link-netnsid 0
    inet 10.4.19.171/24 brd 10.4.19.255 scope global eth1
       valid_lft forever preferred_lft forever
    inet6 fe80::7ccf:c3ff:fe79:3fc/64 scope link
       valid_lft forever preferred_lft forever
27: eth0@if28: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 8900 qdisc noqueue state UP group default
    link/ether f6:bd:38:55:39:4d brd ff:ff:ff:ff:ff:ff link-netnsid 0
    inet 10.253.0.28/16 brd 10.253.255.255 scope global eth0
       valid_lft forever preferred_lft forever
    inet6 fe80::f4bd:38ff:fe55:394d/64 scope link
       valid_lft forever preferred_lft forever
```

eth0@if28 (10.253.0.28) is the regular container (pod) network interface. In addition, there is a second NIC, eth1@if29 (10.4.19.171), which is attached to the host network through br0. The same can be done with VMs.

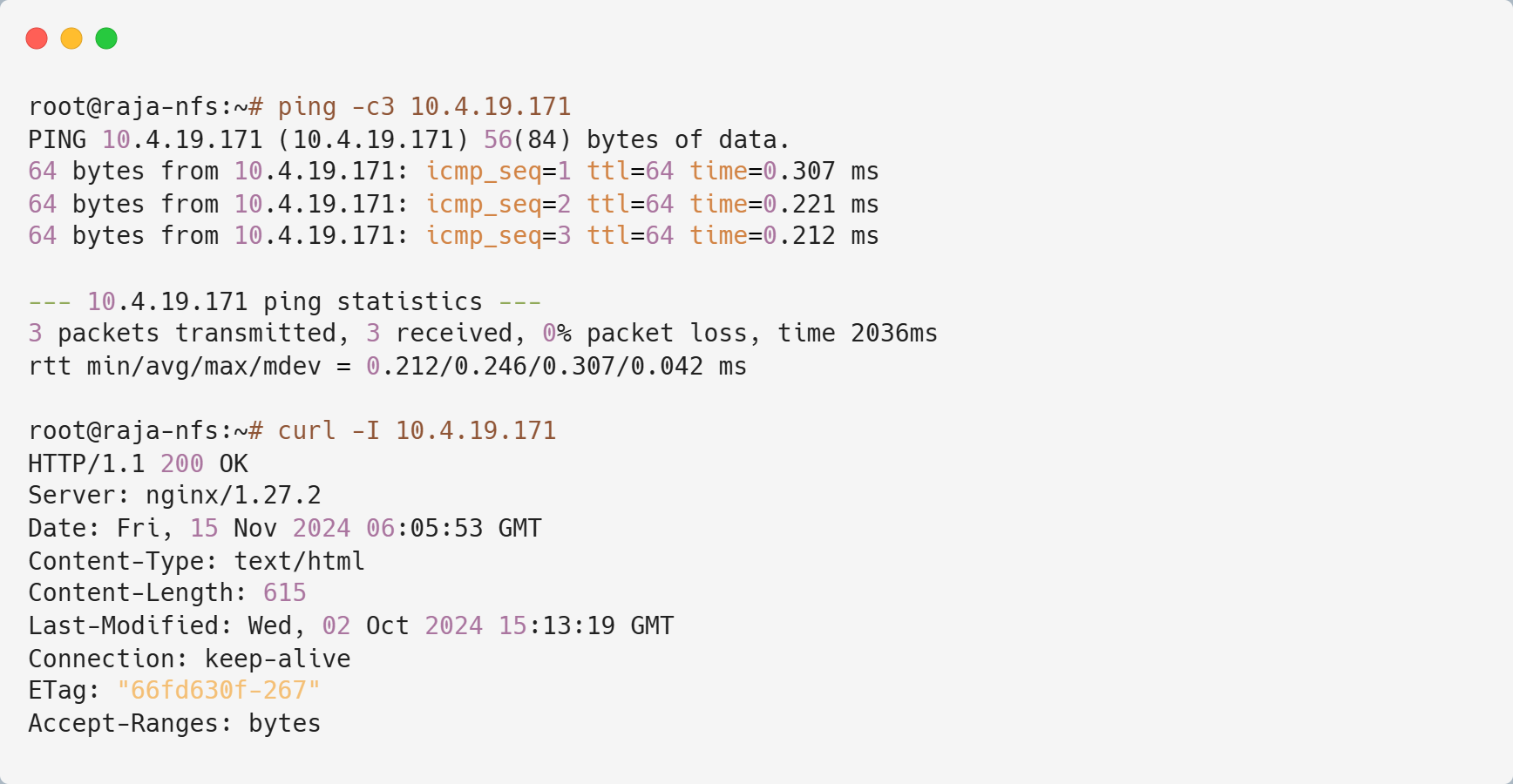

Operational Test from Outside the K8s Cluster

Create a Sample VM

Apply the following manifest to define the VM.

```yaml
apiVersion: kubevirt.io/v1
kind: VirtualMachine
metadata:
  name: testing
spec:
  runStrategy: Always
  template:
    metadata:
      annotations:
        kubevirt.io/allow-pod-bridge-network-live-migration: ""
      labels:
        kubevirt.io/size: small
        kubevirt.io/domain: testing
    spec:
      domain:
        cpu:
          cores: 2
        devices:
          disks:
            - name: disk0
              disk:
                bus: virtio
            - name: cloudinit
              cdrom:
                bus: sata
                readonly: true
          rng: {}
          interfaces:
            - name: default
              masquerade: {}
            - name: underlay
              bridge: {}
        resources:
          requests:
            memory: 1024Mi
      terminationGracePeriodSeconds: 15
      networks:
        - name: default
          pod: {}
        - name: underlay
          multus:
            networkName: underlay-bridge
      volumes:
        - name: disk0
          dataVolume:
            name: testing
        - name: cloudinit
          cloudInitNoCloud:
            userData: |-
              #cloud-config
              chpasswd:
                list: |
                  ubuntu:ubuntu
                expire: False
              disable_root: false
              ssh_pwauth: True
              ssh_authorized_keys:
                - ssh-rsa AAAA...
            networkData: |
              version: 2
              ethernets:
                enp1s0:
                  dhcp4: true
                enp2s0:
                  dhcp4: false
                  addresses: [10.4.19.172/24]
                  routes:
                    - to: 10.4.19.0/24
                      via: 10.4.19.1
  dataVolumeTemplates:
    - metadata:
        name: testing
      spec:
        storage:
          storageClassName: csi-rbd-sc
          accessModes:
            - ReadWriteMany
          resources:
            requests:
              storage: 10Gi
        source:
          registry:
            url: docker://quay.io/containerdisks/ubuntu:22.04
```

As explained earlier, the VM is connected to the regular container network and also to the host network through Multus. Besides the manifest itself, some settings are configured with cloud-init. The network-related parts are:

```yaml
interfaces:
  - name: default
    masquerade: {}
  - name: underlay
    bridge: {}

networks:
  - name: default
    pod: {}
  - name: underlay
    multus:
      networkName: underlay-bridge

networkData: |
  version: 2
  ethernets:
    enp1s0:
      dhcp4: true
    enp2s0:
      dhcp4: false
      addresses: [10.4.19.172/24]
      routes:
        - to: 10.4.19.0/24
          via: 10.4.19.1
```

The first interface, default (enp1s0 inside the guest), is the container network. The VM reaches it through the virt-launcher pod that KubeVirt creates when the VM runs.

In masquerade mode, the VM sits on a small private network shared only with virt-launcher. This network is separate from the container network and defaults to 10.0.2.0/24. The VM receives its IP address from virt-launcher via DHCP, and virt-launcher NATs the VM's traffic onto the pod network.

Some CNI plugins reportedly do not work properly unless masquerade mode is used here. For that reason, KubeVirt has a setting that disallows bridge binding on the pod network and so effectively forces masquerade mode: permitBridgeInterfaceOnPodNetwork: false.
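The permitBridgeInterfaceOnPodNetwork setting lives in the cluster-wide KubeVirt custom resource. Below is a minimal sketch of where it goes. It assumes KubeVirt was installed with the default CR name and namespace (kubevirt/kubevirt), so in practice you would edit the existing CR rather than create a new one.

```yaml
# Assumes the default KubeVirt CR (name: kubevirt, namespace: kubevirt)
apiVersion: kubevirt.io/v1
kind: KubeVirt
metadata:
  name: kubevirt
  namespace: kubevirt
spec:
  configuration:
    network:
      # Disallow bridge binding on the pod network; pod-network
      # interfaces then need another binding such as masquerade
      permitBridgeInterfaceOnPodNetwork: false
```

With this set, a VM that requests bridge: {} on its pod network should be rejected. Bridge binding on Multus networks, such as the underlay interface here, is not affected.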

The second interface, underlay (enp2s0 inside the guest), is the host network. It is associated with the underlay-bridge NetworkAttachmentDefinition created earlier, and its address (10.4.19.172) is set statically through cloud-init.
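As far as I can tell, the guest names enp1s0 and enp2s0 follow the virtual PCI slots the NICs land in. That means the cloud-init networkData above depends on interface order. One way to decouple it is to pin a MAC address on the KubeVirt interface and match on that MAC in netplan.

This is a sketch under assumptions. The MAC 02:00:00:0a:13:ac (a locally administered address) and the netplan ID underlay0 are hypothetical values, and the MAC must be unique on 10.4.19.0/24.

```yaml
# Fragment of the VM template spec; only the relevant parts are shown.
interfaces:
  - name: default
    masquerade: {}
  - name: underlay
    bridge: {}
    macAddress: "02:00:00:0a:13:ac"   # hypothetical fixed MAC

# ...and in cloudInitNoCloud.networkData:
networkData: |
  version: 2
  ethernets:
    enp1s0:
      dhcp4: true
    underlay0:                        # hypothetical netplan ID
      match:
        macaddress: "02:00:00:0a:13:ac"
      set-name: underlay0
      dhcp4: false
      addresses: [10.4.19.172/24]
      routes:
        - to: 10.4.19.0/24
          via: 10.4.19.1
```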

Start the VM

```
kubectl get vm,vmi testing
NAME                                 AGE    STATUS    READY
virtualmachine.kubevirt.io/testing   125m   Running   True

NAME                                         AGE    PHASE     IP            NODENAME           READY
virtualmachineinstance.kubevirt.io/testing   124m   Running   10.253.0.69   jah-k3s-h100-003   True
```

Look inside the VM

virtctl console testing

---

ubuntu@testing:~$ ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: enp1s0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 8900 qdisc fq_codel state UP group default qlen 1000

link/ether 6a:32:0b:08:45:79 brd ff:ff:ff:ff:ff:ff

inet 10.0.2.2/24 metric 100 brd 10.0.2.255 scope global dynamic enp1s0

valid_lft 86305653sec preferred_lft 86305653sec

inet6 fe80::6832:bff:fe08:4579/64 scope link

valid_lft forever preferred_lft forever

3: enp2s0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP group default qlen 1000

link/ether 76:b3:da:fa:2b:12 brd ff:ff:ff:ff:ff:ff

inet 10.4.19.172/24 brd 10.4.19.255 scope global enp2s0

valid_lft forever preferred_lft forever

inet6 fe80::74b3:daff:fefa:2b12/64 scope link

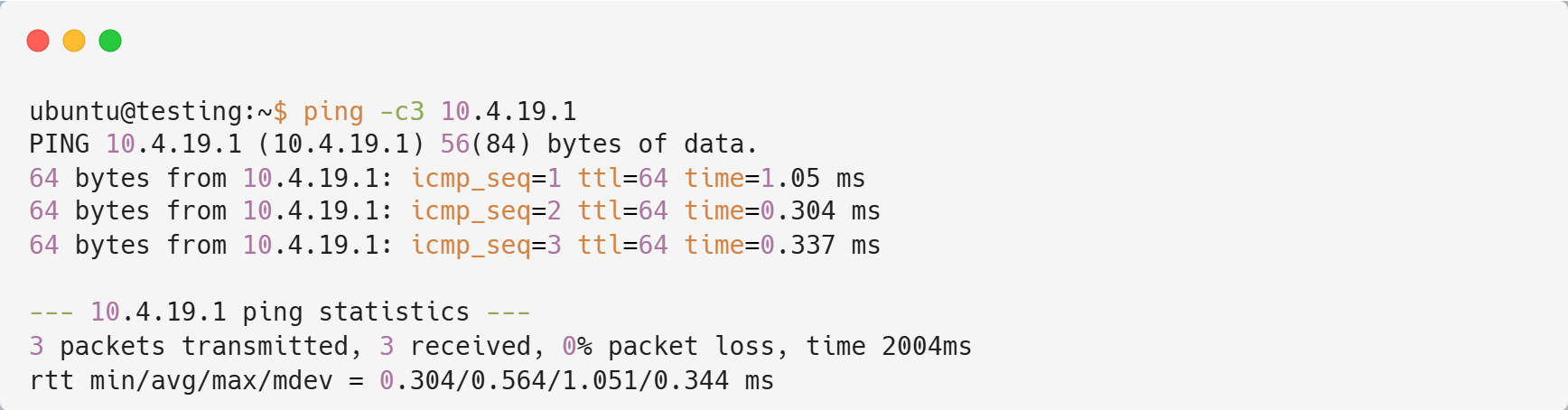

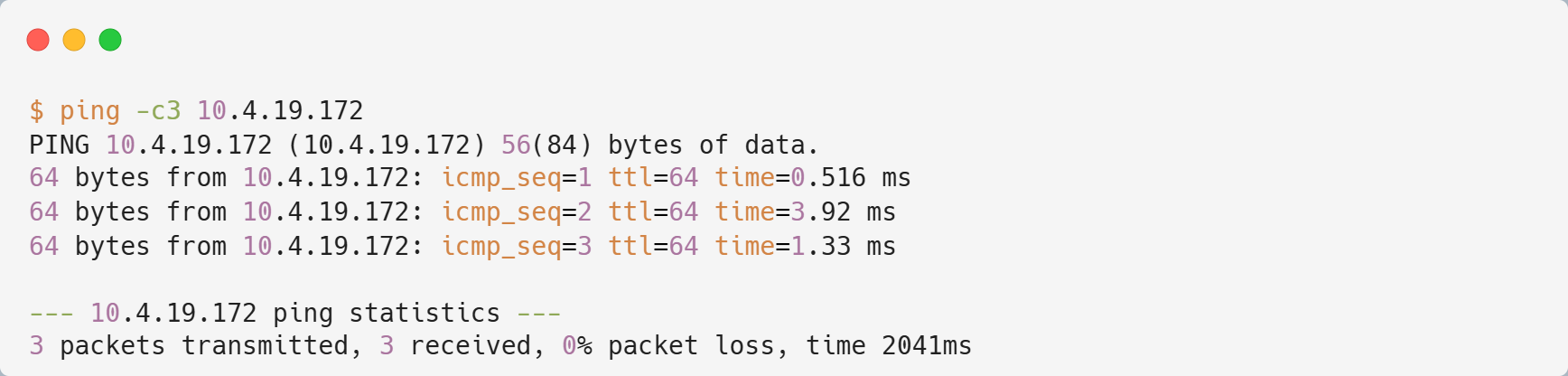

valid_lft forever preferred_lft foreverAs we can see, now the vm have two interfaces, enp1s0 and enp2s0. Let's try to ping gateway from VM

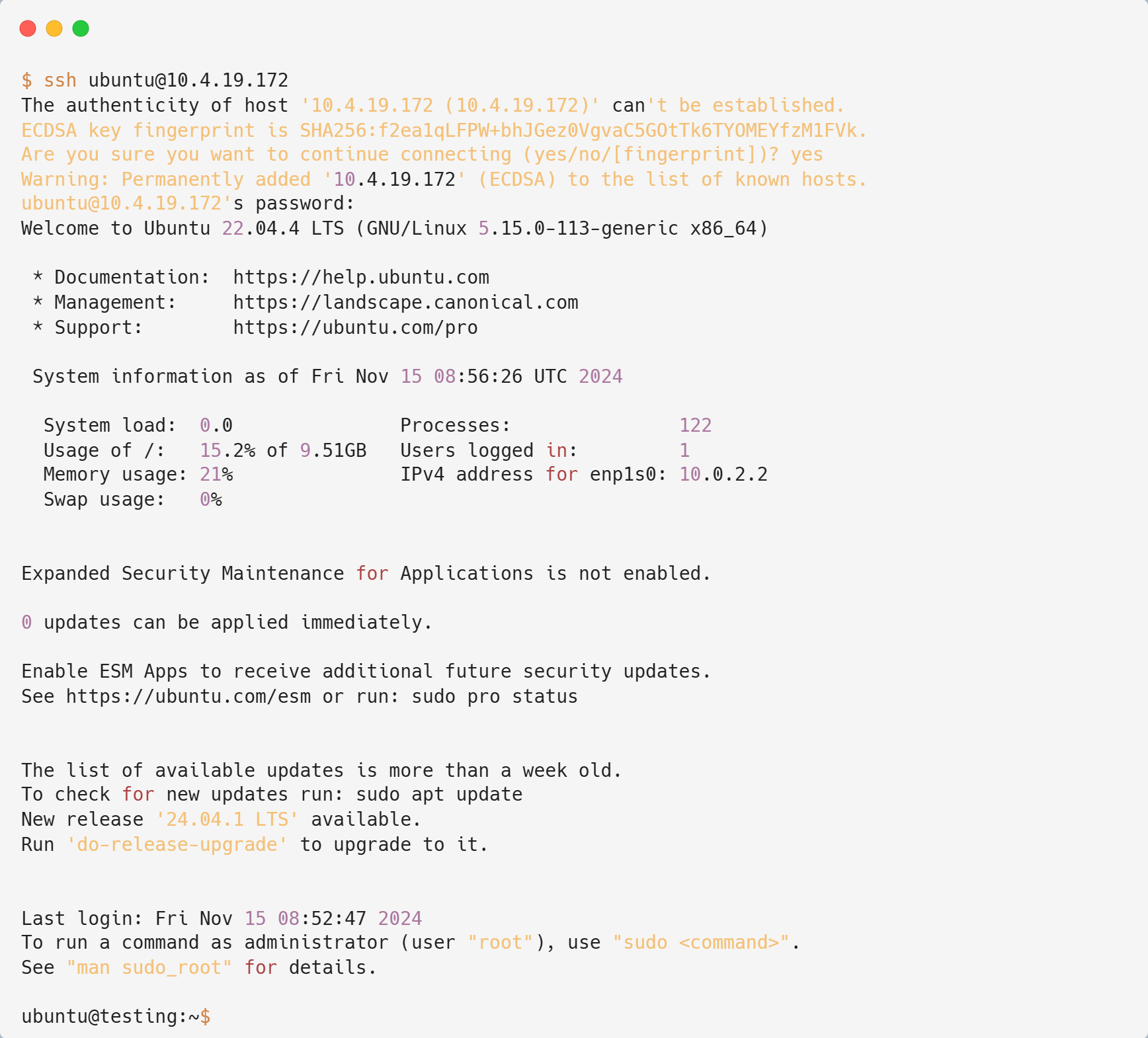

Now try to SSH into the VM from the gateway, i.e. from outside the cluster.

Voilà! The VM is now accessible from outside the Kubernetes cluster. But how does it work?

Deep Dive Down the Rabbit Hole

First, let's find out which node the VM was deployed on.

```
kubectl get vmi testing
NAME      AGE    PHASE     IP            NODENAME           READY
testing   138m   Running   10.253.0.69   jah-k3s-h100-003   True
```

The VM was deployed on jah-k3s-h100-003, so let's check the bridge there.

```
root@jah-k3s-h100-003:~# brctl show
bridge name     bridge id               STP enabled     interfaces
br0             8000.461faffa57c2       no              enp8s0
                                                        veth233692da

root@jah-k3s-h100-003:~# ip link show veth233692da
23: veth233692da@if2: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master br0 state UP mode DEFAULT group default
    link/ether 2e:d5:14:5a:ef:ad brd ff:ff:ff:ff:ff:ff link-netns cni-bcaaa826-60cf-3a58-f6ae-561d203ee174
```

The output shows that br0 now has two ports: my secondary NIC (enp8s0) and a veth interface (veth233692da). Was the veth created for the VM? Let's dig deeper.

The ip link output shows link-netns cni-bcaaa826-60cf-3a58-f6ae-561d203ee174, which means the other end of the veth pair lives in that network namespace. Let's check what is inside it.

```
root@jah-k3s-h100-003:~# ip netns exec cni-bcaaa826-60cf-3a58-f6ae-561d203ee174 ip a
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
       valid_lft forever preferred_lft forever
    inet6 ::1/128 scope host
       valid_lft forever preferred_lft forever
2: 9971e86c032-nic@if23: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master k6t-9971e86c032 state UP group default
    link/ether aa:9d:38:41:e5:48 brd ff:ff:ff:ff:ff:ff link-netnsid 0
    inet6 fe80::a89d:38ff:fe41:e548/64 scope link
       valid_lft forever preferred_lft forever
3: k6t-eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 8900 qdisc noqueue state UP group default qlen 1000
    link/ether 02:00:00:00:00:00 brd ff:ff:ff:ff:ff:ff
    inet 10.0.2.1/24 brd 10.0.2.255 scope global k6t-eth0
       valid_lft forever preferred_lft forever
    inet6 fe80::ff:fe00:0/64 scope link
       valid_lft forever preferred_lft forever
4: tap0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 8900 qdisc fq_codel master k6t-eth0 state UP group default qlen 1000
    link/ether 6e:55:cc:88:43:32 brd ff:ff:ff:ff:ff:ff
    inet6 fe80::6c55:ccff:fe88:4332/64 scope link
       valid_lft forever preferred_lft forever
5: k6t-9971e86c032: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000
    link/ether aa:9d:38:41:e5:48 brd ff:ff:ff:ff:ff:ff
    inet 169.254.75.11/32 scope global k6t-9971e86c032
       valid_lft forever preferred_lft forever
    inet6 fe80::a89d:38ff:fe41:e548/64 scope link
       valid_lft forever preferred_lft forever
6: tap9971e86c032: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel master k6t-9971e86c032 state UP group default qlen 1000
    link/ether de:f7:32:66:ac:df brd ff:ff:ff:ff:ff:ff
    inet6 fe80::dcf7:32ff:fe66:acdf/64 scope link
       valid_lft forever preferred_lft forever
7: pod9971e86c032: <BROADCAST,NOARP> mtu 1500 qdisc noop state DOWN group default qlen 1000
    link/ether 76:b3:da:fa:2b:12 brd ff:ff:ff:ff:ff:ff
    inet 10.4.19.2/24 brd 10.4.19.255 scope global pod9971e86c032
       valid_lft forever preferred_lft forever
    inet6 fe80::74b3:daff:fefa:2b12/64 scope link
       valid_lft forever preferred_lft forever
21: eth0@if22: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 8900 qdisc noqueue state UP group default
    link/ether 6a:32:0b:08:45:79 brd ff:ff:ff:ff:ff:ff link-netnsid 0
    inet 10.253.0.69/16 brd 10.253.255.255 scope global eth0
       valid_lft forever preferred_lft forever
    inet6 fe80::6832:bff:fe08:4579/64 scope link
       valid_lft forever preferred_lft forever
```

This is the network namespace of the VM's virt-launcher pod: its eth0 holds 10.253.0.69, the same IP that kubectl get vmi reported. The peer of veth233692da is 9971e86c032-nic. Its @if23 suffix points to interface index 23 on the host, which is veth233692da, and veth233692da@if2 points back to index 2 here.

Looking closer, 9971e86c032-nic has k6t-9971e86c032 as its master, which suggests that k6t-9971e86c032 is a bridge and 9971e86c032-nic is one of its ports.

```
root@jah-k3s-h100-003:~# ip netns exec cni-bcaaa826-60cf-3a58-f6ae-561d203ee174 brctl show
bridge name       bridge id           STP enabled     interfaces
k6t-9971e86c032   8000.aa9d3841e548   no              9971e86c032-nic
                                                      tap9971e86c032
k6t-eth0          8000.020000000000   no              tap0
```

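Yes, it is a bridge. Its second port, tap9971e86c032, is the tap device that connects to the VM. So the path for the underlay traffic is:

secondary NIC (enp8s0) -> host bridge (br0) -> veth pair (veth233692da / 9971e86c032-nic) -> in-pod bridge (k6t-9971e86c032) -> tap device (tap9971e86c032) -> VM (enp2s0)

The pod-network NIC takes a separate path. tap0 is attached to k6t-eth0 (10.0.2.1/24), which serves as the masquerade gateway for the VM's enp1s0 (10.0.2.2).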

Awesome! Now that we’ve explored how KubeVirt works with Multus CNI, in the final part, I’ll dive into using Kube-OVN and uncover why it offers more capabilities than Multus CNI.