Introduction

Monorepos are becoming increasingly common, especially for teams managing multiple related services. While they offer many benefits, such as simplified dependency management and easier refactoring, they can complicate CI/CD setups. Unlike multi-repo structures, where each service has its own pipeline, a monorepo requires smarter automation to avoid triggering builds for services that haven’t changed.

In this blog post, we'll walk through how we used GitHub Actions and folder-based filtering to solve this problem. By defining specific paths for each microservice, we can detect changes at the folder level and trigger pipeline runs only for the services that changed. This makes our CI/CD process both faster and more efficient.

As the base project, I’m using a fork of the GoogleCloudPlatform/microservices-demo repository. You can check out my forked version here.

Create GitHub Actions

In your GitHub repository, create a folder named .github/workflows if it doesn't already exist. This is where all your workflow files live. Inside .github/workflows, create a YAML file, e.g., micro-services.yaml. This file defines the whole pipeline: change detection, image builds, and deployment.

```yaml
name: Microservices Pipeline

on:
  push:
    branches: [ main ]

jobs:
  ## Detect Changes in Microservices
  changes:
    runs-on: ubuntu-latest
    steps:
      - uses: actions/checkout@v4
        with:
          fetch-depth: 0
      - uses: dorny/paths-filter@v3
        id: filter
        with:
          filters: .github/filters.yaml
    outputs:
      # Expose matched filters as job 'packages' output variable
      packages: ${{ steps.filter.outputs.changes }}

  ## Build & Push Microservices Images
  build:
    needs: changes
    runs-on: ubuntu-latest
    if: ${{ needs.changes.outputs.packages != '[]' }}
    strategy:
      fail-fast: false
      matrix:
        # Parse JSON array containing names of all filters matching any of changed files
        service: ${{ fromJSON(needs.changes.outputs.packages) }}
    steps:
      - uses: actions/checkout@v4
        with:
          fetch-depth: 0
      - uses: docker/setup-qemu-action@v3
      - uses: docker/setup-buildx-action@v3
      - name: Login to DockerHub
        uses: docker/login-action@v3
        with:
          username: ${{ secrets.DOCKERHUB_USERNAME }}
          password: ${{ secrets.DOCKERHUB_TOKEN }}
      - name: Build & Push ${{ matrix.service }}
        uses: docker/build-push-action@v6
        with:
          context: src/${{ matrix.service }}
          file: src/${{ matrix.service }}/Dockerfile
          push: true
          tags: |
            ${{ secrets.DOCKERHUB_USERNAME }}/microservices-demo:${{ matrix.service }}
            ${{ secrets.DOCKERHUB_USERNAME }}/microservices-demo:${{ matrix.service }}-${{ github.sha }}

  ## Deploy Microservices
  deploy:
    needs: [changes, build]
    runs-on: ubuntu-latest
    if: ${{ needs.build.result == 'success' && needs.changes.outputs.packages != '[]' }}
    strategy:
      fail-fast: false
      matrix:
        # Parse JSON array containing names of all filters matching any of changed files
        service: ${{ fromJSON(needs.changes.outputs.packages) }}
    steps:
      - uses: actions/checkout@v4
        with:
          fetch-depth: 0
      - name: Deploy ${{ matrix.service }}
        run: |
          echo "# Your deploy steps for ${{ matrix.service }}"
```

Also, define the filter rules in a separate file under the .github folder, e.g., .github/filters.yaml (the path the workflow passes to dorny/paths-filter). This file maps each filter name to the folders or files that should trigger the pipeline when they change. Each filter name must match the service's folder name under src/, because the build job uses it to locate the Docker build context.

```yaml
# .github/filters.yaml
frontend: src/frontend/**
loadgenerator: src/loadgenerator/**
paymentservice: src/paymentservice/**
productcatalogservice: src/productcatalogservice/**
recommendationservice: src/recommendationservice/**
shippingservice: src/shippingservice/**
currencyservice: src/currencyservice/**
emailservice: src/emailservice/**
adservice: src/adservice/**
cartservice: src/cartservice/**
checkoutservice: src/checkoutservice/**
shoppingassistantservice: src/shoppingassistantservice/**
```

Actions Explanation Details
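A note on the filter file before the walkthrough: every top-level key becomes a filter name, and every matching name ends up in the build matrix as a folder under src/. So if several services also depend on a shared folder, it's safer to reuse that path with a YAML anchor on a list item than to add a separate top-level filter for it, which would show up in the matrix as a service that doesn't exist. The sketch below assumes a hypothetical shared protos/ folder used by paymentservice and checkoutservice; adjust the paths to your own repository.

```yaml
# .github/filters.yaml (sketch): "protos/" is a hypothetical shared folder.
paymentservice:
  - &protos 'protos/**'        # anchor defined on a list item, not as a top-level filter
  - 'src/paymentservice/**'
checkoutservice:
  - *protos                    # alias reuses the same pattern
  - 'src/checkoutservice/**'
frontend: src/frontend/**      # a single pattern per filter still works
```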

Trigger (on block)

The pipeline is triggered whenever there is a push to the main branch.

```yaml
name: Microservices Pipeline

on:
  push:
    branches: [ main ]
```

Jobs & Steps

It starts with a job called changes. This job uses the dorny/paths-filter action to detect which folders were changed by the push, using the rules stored in a separate file, .github/filters.yaml. The action's changes output, a JSON array with the names of all matching filters, is exposed as the job output packages. If someone updates only one service folder, for example src/paymentservice, then only that service continues to the next jobs.

changes:

runs-on: ubuntu-latest

steps:

- uses: actions/checkout@v4

with:

fetch-depth: 0

- uses: dorny/paths-filter@v3

id: filter

with:

filters: .github/filters.yaml

outputs:

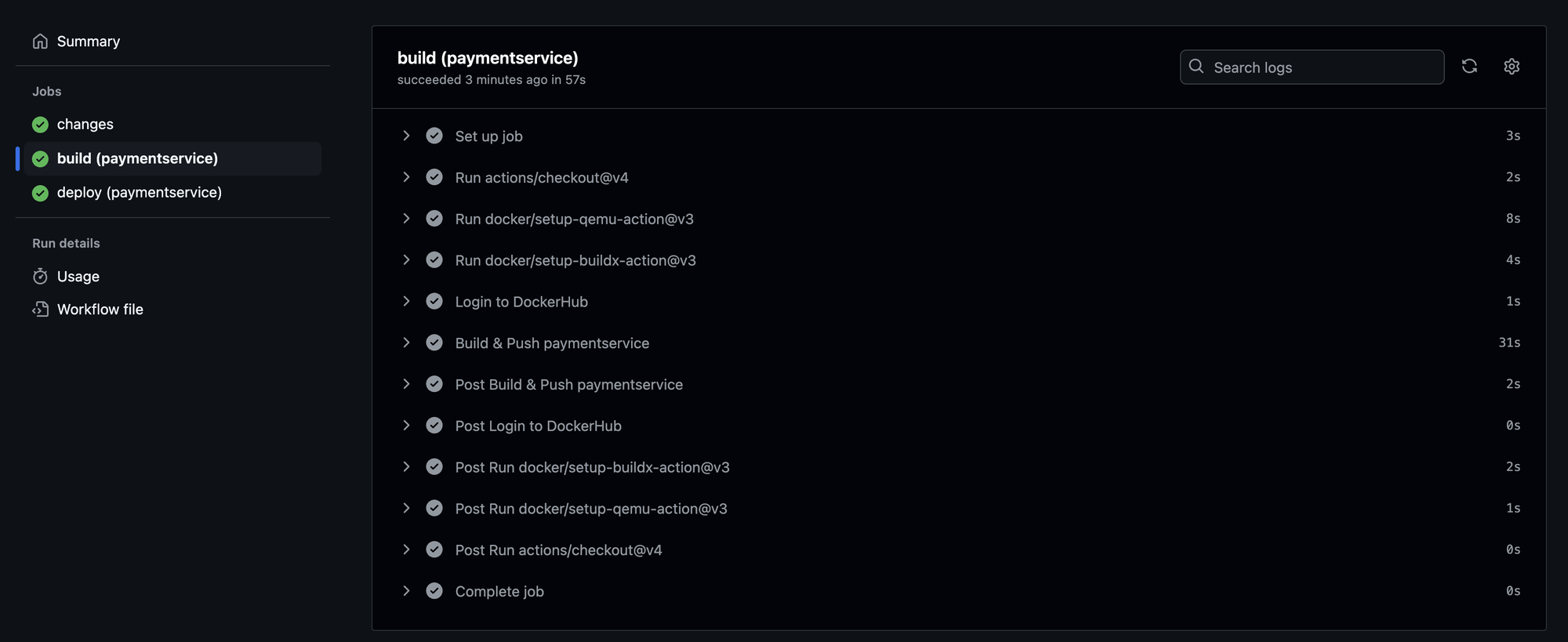

packages: ${{ steps.filter.outputs.changes }}Next is the build job. It will only run if there are changes detected from the previous step. It uses a matrix strategy, so it can build multiple services in parallel if needed. For each changed service, it builds a Docker image and pushes it to DockerHub using docker/build-push-action. To support this, it first sets up Docker Buildx and QEMU, and logs into DockerHub using GitHub Secrets.

```yaml
build:
  needs: changes
  runs-on: ubuntu-latest
  if: ${{ needs.changes.outputs.packages != '[]' }}
  strategy:
    fail-fast: false
    matrix:
      service: ${{ fromJSON(needs.changes.outputs.packages) }}
  steps:
    - uses: actions/checkout@v4
      with:
        fetch-depth: 0
    - uses: docker/setup-qemu-action@v3
    - uses: docker/setup-buildx-action@v3
    - name: Login to DockerHub
      uses: docker/login-action@v3
      with:
        username: ${{ secrets.DOCKERHUB_USERNAME }}
        password: ${{ secrets.DOCKERHUB_TOKEN }}
    - name: Build & Push ${{ matrix.service }}
      uses: docker/build-push-action@v6
      with:
        context: src/${{ matrix.service }}
        file: src/${{ matrix.service }}/Dockerfile
        push: true
        tags: |
          ${{ secrets.DOCKERHUB_USERNAME }}/microservices-demo:${{ matrix.service }}
          ${{ secrets.DOCKERHUB_USERNAME }}/microservices-demo:${{ matrix.service }}-${{ github.sha }}
```

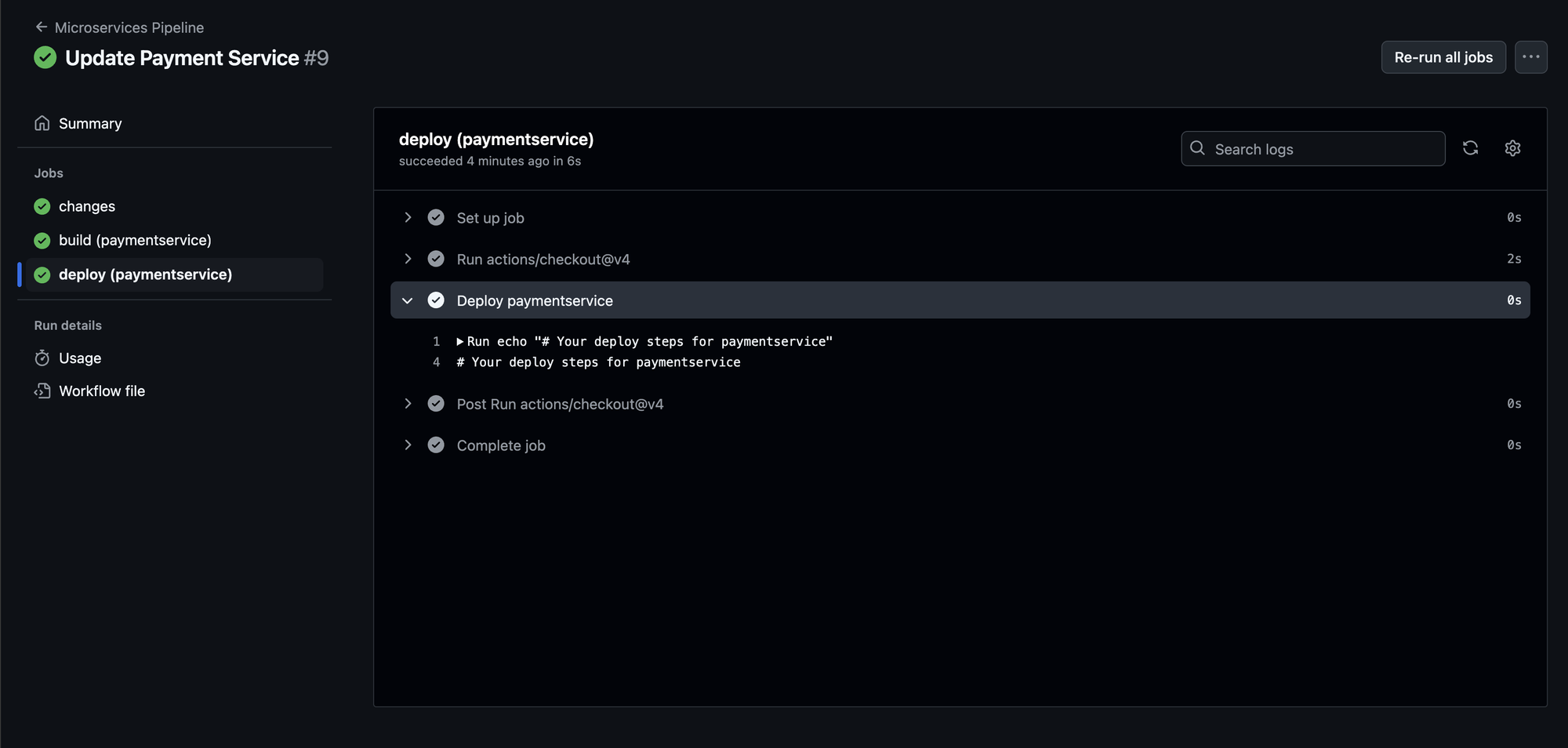
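To make the naming convention concrete: because the filter names double as folder names under src/, the build step for a single matrix entry resolves to roughly the values below. This is only an illustration; `<DOCKERHUB_USERNAME>` and `<commit SHA>` are placeholders for the secret's value and the full github.sha of the pushed commit.

```yaml
# Illustration: the "Build & Push" step as seen by the matrix run where
# matrix.service = paymentservice (<...> values are placeholders, not literals)
- name: Build & Push paymentservice
  uses: docker/build-push-action@v6
  with:
    context: src/paymentservice
    file: src/paymentservice/Dockerfile
    push: true
    tags: |
      <DOCKERHUB_USERNAME>/microservices-demo:paymentservice
      <DOCKERHUB_USERNAME>/microservices-demo:paymentservice-<commit SHA>
```

If a filter name doesn't match its folder name exactly, the context path won't exist and that matrix run will fail.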

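Finally, the deploy job runs only if the build job succeeded. Because build is a matrix job, its result counts as success only when every matrix run succeeds, so a single failed image build skips deployment for all services in that push. Like build, deploy uses a matrix, so it deploys only the services that changed. In my case, the deployment command is still a placeholder, but you can replace it with your real deploy script or command.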
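For instance, the placeholder Deploy step could eventually look something like the sketch below. It is hypothetical and assumes the services run on Kubernetes, that kubectl on the runner is already configured for your cluster, and that each Deployment is named after its service and has a container called server; check those names against your own manifests. It rolls each Deployment to the image tagged with the current commit SHA and waits for the rollout.

```yaml
# Hypothetical deploy step: assumes a configured kubectl, a Deployment named
# after the service, and a container named "server" inside it.
- name: Deploy ${{ matrix.service }}
  run: |
    kubectl set image deployment/${{ matrix.service }} \
      server=${{ secrets.DOCKERHUB_USERNAME }}/microservices-demo:${{ matrix.service }}-${{ github.sha }}
    kubectl rollout status deployment/${{ matrix.service }} --timeout=300s
```

Using the SHA-suffixed tag rather than the plain service tag means each rollout points at an immutable image, so Kubernetes always sees a changed spec and restarts the pods.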

```yaml
deploy:
  needs: [changes, build]
  runs-on: ubuntu-latest
  if: ${{ needs.build.result == 'success' && needs.changes.outputs.packages != '[]' }}
  strategy:
    fail-fast: false
    matrix:
      service: ${{ fromJSON(needs.changes.outputs.packages) }}
  steps:
    - uses: actions/checkout@v4
      with:
        fetch-depth: 0
    - name: Deploy ${{ matrix.service }}
      run: |
        echo "# Your deploy steps for ${{ matrix.service }}"
```

In both the build and deploy jobs, I use a matrix strategy in GitHub Actions. This allows the workflow to run multiple instances of the same job in parallel, each with a different input. In this case, the input is the name of a microservice that was changed in the push.

```yaml
strategy:
  fail-fast: false
  matrix:
    service: ${{ fromJSON(needs.changes.outputs.packages) }}
```

The matrix.service values are dynamically retrieved from the output of the previous changes job. Specifically, in the changes job, the dorny/paths-filter action checks which folders were changed and returns a JSON array of service names under outputs.changes. These service names are passed into the build and deploy jobs via the needs context.

For example, if someone commits a change that only affects src/paymentservice and src/adservice, the output of changes will be:

```json
["paymentservice", "adservice"]
```

The matrix then runs the build and deploy jobs separately for each of these services, in parallel.
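Put differently, for that push the dynamic matrix behaves as if it had been written statically, as in this sketch. GitHub Actions creates one job per value, and each job sees its own value as matrix.service.

```yaml
strategy:
  fail-fast: false
  matrix:
    # Equivalent static matrix when changes = ["paymentservice", "adservice"]
    service: [paymentservice, adservice]
```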

The fail-fast: false option tells GitHub Actions not to cancel all other matrix jobs if one of them fails. This is useful when you want to build or deploy multiple services independently. If one service fails to build or deploy, the others will still continue.
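If running every changed service at once is too much, for example because of runner concurrency limits, the same strategy block also accepts max-parallel, which caps how many matrix jobs run at the same time. A sketch with an arbitrary limit of 3:

```yaml
strategy:
  fail-fast: false
  max-parallel: 3   # arbitrary example value: at most 3 matrix jobs run at once
  matrix:
    service: ${{ fromJSON(needs.changes.outputs.packages) }}
```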

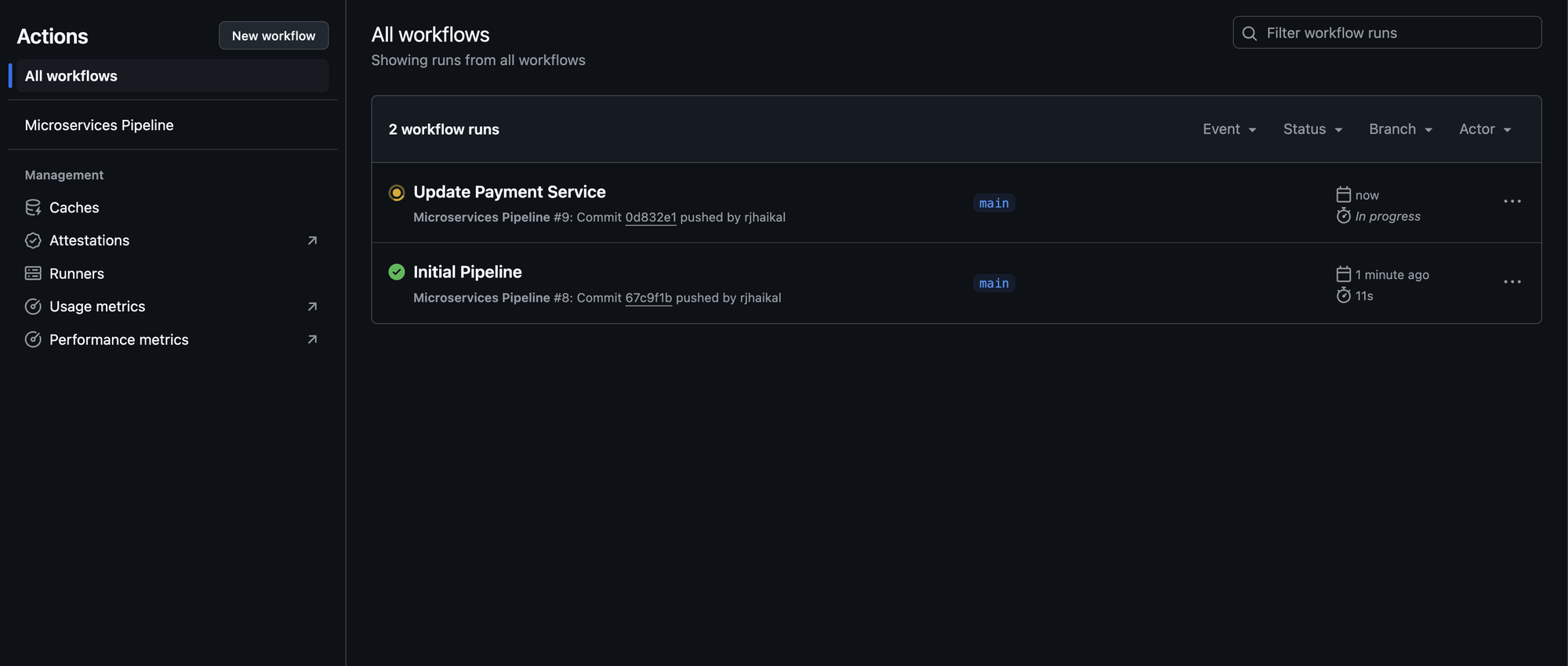

Pipeline in Action

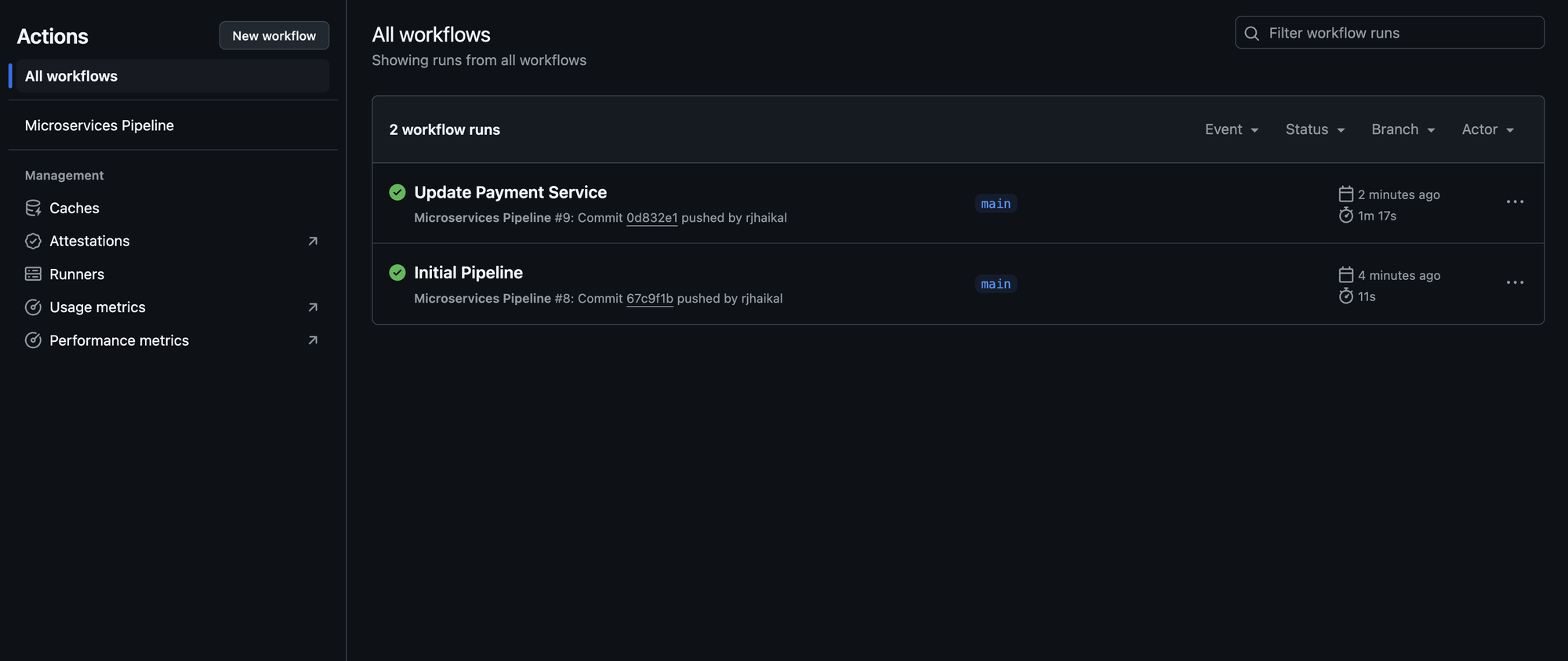

Now it's time to test the pipeline by making a small change inside one of the service folders and pushing it to the main branch. In my case, I added a comment in the paymentservice folder to trigger the workflow.

As expected, only the paymentservice build and deploy jobs ran; no jobs were created for the other services.

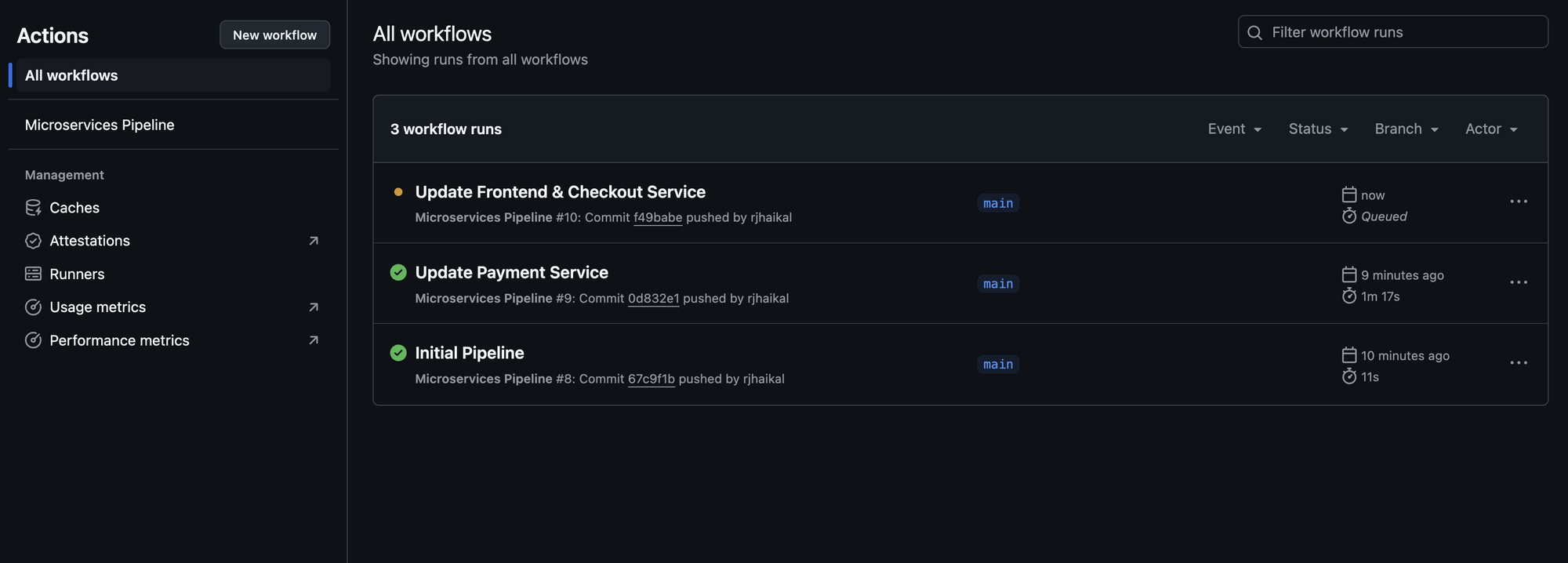

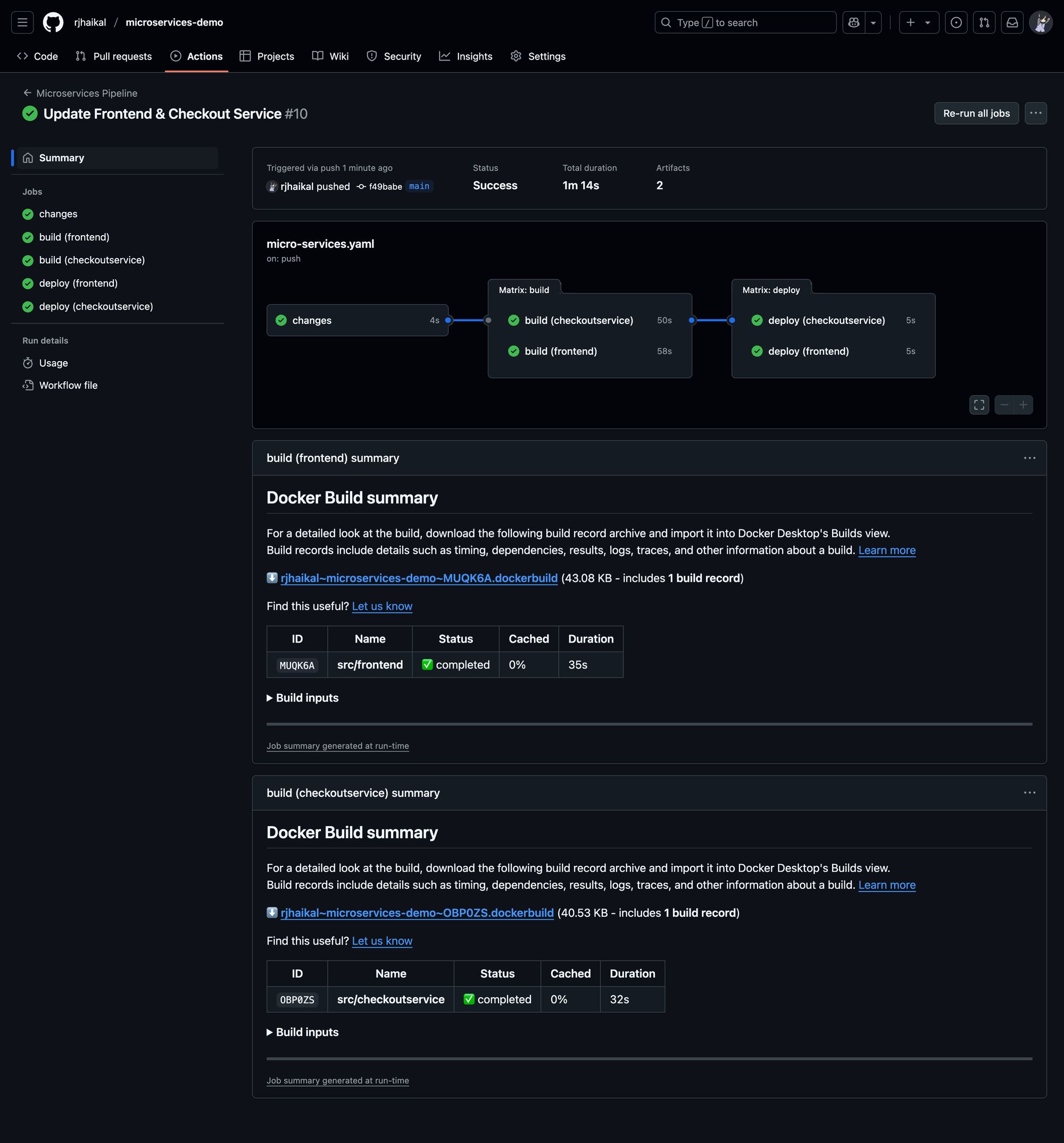

For the next test, I modified two services, frontend and checkoutservice. This helps us ensure that the matrix strategy can handle more than one changed service in a single push.

Everything worked as planned: the matrix created separate build and deploy jobs for frontend and checkoutservice, confirming that the pipeline handles several changed services in a single push.

Conclusion

The GitHub Actions pipeline we’ve built is an efficient way to handle deployments in a monorepo setup with multiple microservices. By using the paths-filter action, it accurately detects which service folders were changed, allowing us to trigger builds and deployments only for the affected components.

This approach not only saves time and resources but also keeps the CI/CD process clean and focused. Thanks to the matrix strategy, builds and deployments run in parallel, which keeps the workflow fast as the number of services grows.

Overall, this setup makes the DevOps pipeline easier to maintain, and it’s a solid pattern for teams working with large, service-oriented repositories.


🤝 Reach Out to Me

Thanks for reading! If you found this helpful or have any thoughts to share, I’d love to connect with you. You can find me on LinkedIn; let’s share ideas, learn from each other, and grow together ✨